Chapter 1: Introduction to Rust and Actix Web

Introduction to Rust language

Rust is a statically-typed, systems-level programming language designed for performance, reliability, and safety. It was developed by Mozilla and released in 2010. Rust’s syntax is similar to that of C++, but it has a number of unique features that set it apart from other languages, such as a strong type system, ownership and borrowing, and zero-cost abstractions.

Rust’s strong type system catches many programming errors at compile-time, helping to reduce bugs and improve code quality. Ownership and borrowing help to ensure memory safety and prevent common programming errors such as null pointer dereferences and data races. Rust also provides zero-cost abstractions, which allow developers to write high-level, expressive code that is as fast and efficient as low-level code.

Rust has gained popularity in recent years, particularly in the systems programming community, due to its focus on performance and safety. It is used in a wide range of applications, including web services, game engines, and operating systems. Introduction to Actix Web framework Actix Web is a powerful, high-performance web framework for Rust, designed for building asynchronous, non-blocking web services. It is built on top of the Actix actor framework and provides a robust set of features for building scalable, reliable, and efficient web applications.

Actix Web is designed with performance in mind, using Rust’s unique features to provide a highly efficient runtime. It uses asynchronous IO and non-blocking APIs to handle a large number of concurrent connections with minimal overhead. This makes it an excellent choice for building web services that need to handle high levels of traffic and requests.

Actix Web provides a wide range of features, including routing, middleware, request and response handling, web sockets, and much more. It also supports a variety of data formats, including JSON, XML, and YAML. Actix Web is highly extensible, allowing developers to add custom middleware, extractors, and handlers to tailor it to their specific needs.

Overall, Actix Web is a powerful, flexible, and efficient web framework that is well-suited for building high-performance web services in Rust. Advantages of using Rust and Actix Web for backend development Using Rust and Actix Web for backend development offers several advantages:

-

Performance: Rust is designed for high-performance systems-level programming, and Actix Web builds on this by providing an efficient, asynchronous, non-blocking runtime. This makes it an excellent choice for building high-performance web services that can handle large amounts of traffic and requests.

-

Safety: Rust’s strong type system, ownership and borrowing, and zero-cost abstractions help to ensure memory safety and prevent common programming errors such as null pointer dereferences and data races. This makes Rust code less prone to security vulnerabilities and bugs, which is particularly important for backend systems that handle sensitive data.

-

Scalability: Actix Web is designed to be highly scalable, using asynchronous IO and non-blocking APIs to handle a large number of concurrent connections with minimal overhead. This makes it well-suited for building web services that need to handle high levels of traffic and requests.

-

Flexibility: Actix Web is highly flexible and extensible, allowing developers to add custom middleware, extractors, and handlers to tailor it to their specific needs. This gives developers the freedom to build backend systems that are highly customized and optimized for their specific use case.

Overall, using Rust and Actix Web for backend development provides a powerful combination of performance, safety, scalability, and flexibility that makes it an excellent choice for building modern web services. Setting up development environment To set up a development environment for Rust and Actix Web, follow these steps:

-

Install Rust: Rust can be installed using rustup, which is a command-line tool that installs and manages Rust. Follow the instructions on the Rust website to install rustup for your operating system.

-

Install a code editor: Rust code can be written in any text editor, but it is recommended to use a code editor with Rust support, such as Visual Studio Code, IntelliJ IDEA, or Atom. Install your preferred code editor and any necessary plugins or extensions.

-

Create a new Rust project: Open a terminal or command prompt and create a new Rust project using the cargo command-line tool. Navigate to the directory where you want to create the project and run the following command:

cargo new myproject --binThis will create a new Rust project named “myproject” with a binary executable target. The --bin flag indicates that we want to create a binary executable rather than a library.

- Add Actix Web as a dependency: Open the

Cargo.tomlfile in your project directory and add Actix Web as a dependency:

[dependencies]

actix-web = "4.0.0"This will tell Cargo to download and include Actix Web in your project.

-

Write some code: Open the

src/main.rsfile in your project directory and start writing some code. You can use the Actix Web documentation to learn how to use the framework and write your own web services. -

Build and run your project: To build your project, navigate to your project directory in a terminal or command prompt and run the following command:

cargo buildThis will compile your project and generate a binary executable in the target/debug directory. To run your project, run the following command:

cargo runThis will start your web service on the default port (usually 8080). You can access it by opening a web browser and navigating to http://localhost:8080.

That’s it! You now have a development environment set up for Rust and Actix Web.

Understanding HTTP and RESTful APIs

HTTP (Hypertext Transfer Protocol) is a protocol used for sending and receiving information over the internet. It is the foundation of the World Wide Web and is used by web browsers and servers to communicate with each other.

REST (Representational State Transfer) is an architectural style for building web services that use HTTP as the underlying protocol. RESTful APIs (Application Programming Interfaces) are web services that follow the principles of REST, and are designed to be simple, scalable, and easy to use.

A RESTful API is built around resources, which are the objects or concepts that the API exposes to the client. Each resource has a unique URI (Uniform Resource Identifier), and clients can perform various operations on the resource using HTTP methods such as GET, POST, PUT, PATCH, and DELETE.

Here are the common HTTP methods used in RESTful APIs:

- GET: Used to retrieve a resource from the server

- POST: Used to create a new resource on the server

- PUT: Used to update an existing resource on the server

- PATCH: Used to partially update an existing resource on the server

- DELETE: Used to delete a resource from the server

In a RESTful API, resources are represented using a standardized data format, such as JSON (JavaScript Object Notation) or XML (Extensible Markup Language). This makes it easy for clients to understand and use the API, regardless of the programming language or platform they are using.

Overall, RESTful APIs are a simple, scalable, and flexible way to build web services that can be used by a wide variety of clients. By following the principles of REST and using HTTP as the underlying protocol, developers can build powerful and efficient web services that are easy to use and maintain. Overview of the Actor Model and its implementation in Actix The Actor Model is a programming model for building concurrent and distributed systems. It was first proposed by Carl Hewitt in the 1970s and has since been used in various languages and frameworks.

In the Actor Model, computation is organized around “actors”, which are independent units of computation that communicate with each other by sending and receiving messages. Actors can be thought of as “miniature machines” that execute code and maintain their own internal state.

Each actor has a unique identity and can receive messages from other actors. When an actor receives a message, it can perform some computation and send messages to other actors. Actors can also create new actors, which can in turn receive and send messages.

Actix is a Rust framework that implements the Actor Model for building high-performance web services. In Actix, actors are implemented as asynchronous Rust functions, which can communicate with each other using messages.

Actors in Actix are organized into a hierarchy, where each actor can have child actors that it supervises. This allows for the creation of fault-tolerant systems, where errors in one actor can be handled by its parent actor.

Actix also provides an actor-based approach to handling HTTP requests, where each incoming request is handled by a separate actor. This allows for efficient handling of large numbers of concurrent requests, as each request is handled independently by a dedicated actor.

Overall, the Actor Model provides a powerful and flexible way to build concurrent and distributed systems, and Actix provides a Rust-based implementation of the Actor Model that is optimized for high-performance web services.

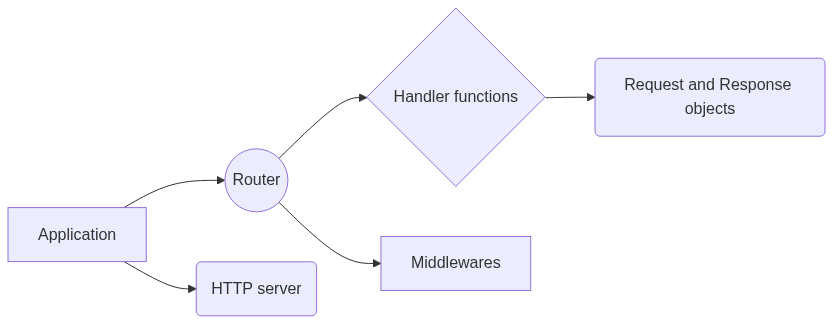

Exploring Actix Web’s architecture and core components

Actix Web is a high-performance web framework built on top of Actix, which is an actor framework for Rust. Actix Web provides a number of core components and architectural patterns that make it easy to build scalable and efficient web services.

Here are some of the core components of Actix Web:

-

Application: The Application is the main entry point for Actix Web, and it is responsible for managing the server, handling incoming requests, and routing requests to the appropriate handlers.

-

Router: The Router is responsible for mapping incoming requests to the appropriate handler functions. It allows for flexible URL matching and supports various HTTP methods, such as GET, POST, PUT, DELETE, etc.

-

Handler functions: Handler functions are Rust functions that are responsible for processing incoming requests and generating responses. They take a request object as input and return a response object as output.

-

Middleware: Middleware functions are functions that are executed before or after the handler functions, and can modify the request and response objects. Middleware functions can be used to implement authentication, rate limiting, logging, and other cross-cutting concerns.

-

Request and Response objects: The Request object represents an incoming HTTP request, and contains information such as the HTTP method, URL, headers, and body. The Response object represents an outgoing HTTP response, and contains information such as the status code, headers, and body.

-

HTTP server: Actix Web provides a high-performance HTTP server that is built on top of the Tokio runtime, which allows for efficient handling of large numbers of concurrent requests.

Actix Web’s architecture is designed to be highly modular and flexible, allowing developers to easily customize and extend the framework to meet their specific needs. By leveraging Rust’s strong type system and efficient runtime, Actix Web provides a powerful and efficient platform for building high-performance web services. Writing and testing your first Actix Web application To write and test your first Actix Web application, you will need to follow these steps:

-

Set up your development environment: Install Rust and Cargo on your system, and create a new Rust project using Cargo.

-

Add Actix Web as a dependency: Add Actix Web to your Cargo.toml file as a dependency, and run

cargo buildto download the necessary dependencies. -

Create a simple handler function: Write a Rust function that takes a

HttpRequestobject as input and returns aHttpResponseobject as output. This function will handle incoming HTTP requests. -

Register the handler function with the router: In your

main.rsfile, use theAppobject to register the handler function with the router, and specify the URL path and HTTP method that should trigger the handler. -

Start the server: Use the

Appobject to start the HTTP server, which will listen for incoming requests and route them to the appropriate handler function. -

Test the application: Use a web browser or a tool like cURL to send an HTTP request to your application and verify that the response is what you expected.

Here’s an example of what the code might look like:

use actix_web::{get, web, App, HttpResponse, HttpServer, Responder};

#[get("/hello/{name}")]

async fn hello(name: web::Path<String>) -> impl Responder {

let message = format!("Hello, {}!", name);

HttpResponse::Ok().body(message)

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.service(hello)

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we define a simple handler function called hello that takes a name parameter from the URL path, and returns a greeting message that includes the name. We then use the App object to register the hello function with the router, and start the HTTP server on port 8080.

To test the application, you can open a web browser and navigate to http://localhost:8080/hello/yourname, where yourname is the name you want to be greeted with. You should see a web page that displays the message “Hello, yourname!“.

By following these steps, you can quickly build and test your first Actix Web application, and start exploring the capabilities of this powerful web framework.

Working with asynchronous programming and futures in Rust

Asynchronous programming and futures are core concepts in Rust and Actix Web, and they are essential for building high-performance web services.

In Rust, asynchronous programming is implemented using the async and await keywords, which allow functions to be executed concurrently without blocking the main thread. Instead of waiting for a function to complete before moving on to the next one, the main thread can continue executing other tasks, and return to the original task when it is ready to proceed.

Futures are a Rust abstraction that represent a computation that may not be complete yet, but will eventually produce a result. Futures can be combined using combinators like map, and_then, and join, allowing for complex and efficient asynchronous workflows.

Here’s an example of how to use asynchronous programming and futures in Actix Web:

use actix_web::{get, web, App, HttpResponse, HttpServer, Responder};

use futures::future::join;

async fn task1() -> String {

// do some asynchronous work

"Hello".to_string()

}

async fn task2() -> String {

// do some other asynchronous work

"world!".to_string()

}

#[get("/hello")]

async fn hello() -> impl Responder {

let (result1, result2) = join(task1(), task2()).await;

let message = format!("{} {}", result1, result2);

HttpResponse::Ok().body(message)

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.service(hello)

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we define two asynchronous tasks (task1 and task2) that perform some work and return a String result. We then use the join combinator to execute both tasks concurrently and wait for both results to be available.

In the hello handler function, we call the join function to execute both tasks asynchronously, and wait for both results to be available. We then combine the two results into a single message and return it as an HTTP response.

By using asynchronous programming and futures, we can take advantage of the performance benefits of concurrent execution without blocking the main thread, allowing our web services to handle large numbers of concurrent requests efficiently.

Managing application state using Actix Web’s built-in data structures

Actix Web provides several built-in data structures that can be used to manage application state. These data structures are designed to be shared between different parts of the application, such as handlers and middleware, and can be accessed using Actix Web’s web module.

Here are some of the built-in data structures provided by Actix Web:

web::Data<T>: This is a container that holds application state of typeT. It can be used to store any type that implements theSendtrait, which means it can be safely accessed from multiple threads. TheDatacontainer is shared between all requests, so any changes made to it will be visible to all subsequent requests.

use actix_web::{web, App, HttpResponse, HttpServer};

struct AppState {

message: String,

}

async fn hello(state: web::Data<AppState>) -> HttpResponse {

HttpResponse::Ok().body(state.message.clone())

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.data(AppState { message: "Hello, world!".to_string() })

.service(web::resource("/hello").to(hello))

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we define a custom AppState struct that holds an application message. We then use the App::data method to store an instance of AppState in the application state. In the hello handler function, we use the web::Data container to retrieve the AppState instance and access its message.

web::Json<T>: This is a container that holds JSON data that has been deserialized into a Rust struct of typeT. It can be used to automatically deserialize JSON data from HTTP requests and serialize Rust structs into JSON responses.

use serde::{Deserialize, Serialize};

#[derive(Deserialize, Serialize)]

struct Greeting {

name: String,

message: String,

}

async fn hello(greeting: web::Json<Greeting>) -> HttpResponse {

let response = format!("{} {}", greeting.message, greeting.name);

HttpResponse::Ok().body(response)

}In this example, we define a Greeting struct that holds a name and message. We then use the web::Json container in the hello handler function to automatically deserialize JSON data from the request body into a Greeting instance. We then use the Greeting instance to build an HTTP response.

web::Path<T>: This is a container that holds a parameter extracted from the URL path. It can be used to extract URL parameters from the request path and pass them as parameters to handler functions.

async fn hello(name: web::Path<String>) -> HttpResponse {

let response = format!("Hello, {}!", name);

HttpResponse::Ok().body(response)

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.service(web::resource("/hello/{name}").route(web::get().to(hello)))

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we use the web::Path container to extract a parameter named name from the URL path. We then use the parameter to build an HTTP response.

By using Actix Web’s built-in data structures, we can manage application state in a safe and efficient way, and

reduce the amount of boilerplate code needed to handle common tasks such as deserializing JSON data or extracting parameters from the URL path.

web::Query<T>: This is a container that holds a parameter extracted from the query string. It can be used to extract query string parameters from the request URL and pass them as parameters to handler functions.

async fn hello(query: web::Query<HashMap<String, String>>) -> HttpResponse {

let name = query.get("name").unwrap_or(&"World".to_string());

let response = format!("Hello, {}!", name);

HttpResponse::Ok().body(response)

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.service(web::resource("/hello").route(web::get().to(hello)))

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we use the web::Query container to extract a query parameter named name from the request URL. If the parameter is not present, we use a default value of “World”. We then use the parameter to build an HTTP response.

web::Form<T>: This is a container that holds form data that has been deserialized into a Rust struct of typeT. It can be used to automatically deserialize form data from HTTP requests and serialize Rust structs into form-encoded responses.

use serde::{Deserialize, Serialize};

#[derive(Deserialize, Serialize)]

struct Greeting {

name: String,

message: String,

}

async fn hello(greeting: web::Form<Greeting>) -> HttpResponse {

let response = format!("{} {}", greeting.message, greeting.name);

HttpResponse::Ok().body(response)

}In this example, we define a Greeting struct that holds a name and message. We then use the web::Form container in the hello handler function to automatically deserialize form data from the request body into a Greeting instance. We then use the Greeting instance to build an HTTP response.

By using Actix Web’s built-in data structures, we can manage application state in a safe and efficient way, reduce the amount of boilerplate code needed to handle common tasks, and improve the readability and maintainability of our code.

Implementing authentication and authorization in Actix Web

Implementing authentication and authorization is an important aspect of building secure web applications. Actix Web provides several mechanisms for implementing authentication and authorization, including middleware and guards.

Middleware in Actix Web is a way to modify the request or response before it reaches the handler function. This can be used to implement cross-cutting concerns such as authentication and authorization.

Guards are used to ensure that a request meets certain criteria before it reaches the handler function. For example, a guard can be used to ensure that a user is authenticated before allowing them to access a protected resource.

Actix Web provides several middleware and guards that can be used to implement authentication and authorization:

-

actix_web::middleware::Logger: This middleware logs incoming requests and outgoing responses. It can be used for debugging and auditing purposes. -

actix_web::middleware::Compress: This middleware compresses responses with gzip or deflate. This can reduce the size of responses and improve performance. -

actix_web::middleware::DefaultHeaders: This middleware sets default headers on responses. This can be used to implement security headers such asX-XSS-ProtectionandContent-Security-Policy. -

actix_web::middleware::Session: This middleware provides session management. It can be used to implement user authentication and authorization. -

actix_web::guard::fn_guard: This guard executes a function to determine if a request meets certain criteria. This can be used to implement custom authentication and authorization logic.

Here is an example of how to implement authentication and authorization using the actix_web::middleware::Session middleware and the actix_web::guard::fn_guard guard:

use actix_web::{guard, web, App, HttpResponse, HttpServer, Responder};

use actix_web::middleware::Session;

async fn login(session: Session) -> impl Responder {

session.set("authenticated", true).unwrap();

HttpResponse::Ok().body("You are now logged in")

}

async fn logout(session: Session) -> impl Responder {

session.remove("authenticated");

HttpResponse::Ok().body("You are now logged out")

}

async fn index(session: Session) -> impl Responder {

if let Some(true) = session.get::<bool>("authenticated").unwrap() {

HttpResponse::Ok().body("You are logged in")

} else {

HttpResponse::Unauthorized().body("You are not authorized")

}

}

#[actix_rt::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.wrap(Session::new("01234567890123456789012345678901").cookie_secure(false))

.route("/login", web::post().to(login))

.route("/logout", web::post().to(logout))

.route("/index", web::get().to(index).guard(guard::fn_guard(|req| {

req.headers().contains_key("authorization")

})))

})

.bind("127.0.0.1:8080")?

.run()

.await

}In this example, we use the Session middleware to manage user sessions. We define three handler functions: login, logout, and index. The login function sets the authenticated key in the session to true when the user logs in. The logout function removes the authenticated key from the session when the user logs out. The index function checks if the authenticated key is present in the session and returns an appropriate response.

We use the guard::fn_guard guard to ensure that the index function is only accessible to authenticated users. The guard executes a function that checks if the request contains an Authorization header. If the header is present, the function returns true, and the request is allowed to reach the index function. If the header is not present, the function returns false, and the request is rejected with a 401 Unauthorized response.

Note that this is just an example of how to implement authentication and authorization using Actix Web. The actual implementation will depend on your specific requirements and security needs.

In addition to middleware and guards, Actix Web also provides support for various authentication and authorization mechanisms such as JSON Web Tokens (JWTs), OAuth2, and Basic Auth. These can be implemented using third-party crates that integrate with Actix Web, such as actix-web-jwt, actix-web-oauth2, and actix-web-httpauth.

With Actix Web’s flexible middleware and guard system, implementing authentication and authorization in your web application can be done in a safe and efficient manner. By properly securing your application, you can protect your users’ data and build trust with your users.

Handling errors and building resilient web services

When building web services, handling errors and building resilience is critical. Actix Web provides several mechanisms to help you achieve this.

One way to handle errors is to use Actix Web’s error handling middleware. This middleware intercepts errors that occur during request processing and generates an appropriate response. The middleware can be customized to handle different types of errors, including those generated by your application code or by third-party dependencies.

Actix Web also provides support for handling errors using the Result type. By returning Result from your handlers, you can propagate errors to the error handling middleware. This allows you to handle errors in a centralized location and provide consistent error responses to clients.

In addition to error handling, Actix Web provides several features for building resilient web services. For example, Actix Web supports timeouts for requests, which can help prevent denial-of-service attacks and improve the reliability of your service. Actix Web also supports graceful shutdown, which allows your service to complete any in-flight requests before shutting down.

Actix Web also provides support for distributed tracing using the OpenTelemetry standard. This allows you to trace requests across different services and understand the performance of your entire system.

By using these features, you can build web services that are resilient to failures and provide a good experience to your users. Optimizing Actix Web applications for performance and scalability Actix Web is designed to be fast and scalable by default, but there are several techniques you can use to further optimize the performance of your applications.

One important technique is to use asynchronous programming whenever possible. Asynchronous programming allows your application to handle more requests with fewer threads, which can improve the overall throughput and scalability of your service. Actix Web provides a powerful asynchronous runtime that allows you to write highly performant and scalable code using Rust’s powerful async/await syntax.

Another technique is to use connection pooling for database connections. Actix Web provides support for connection pooling using the r2d2 crate. Connection pooling allows your application to reuse database connections instead of creating new ones for each request. This can reduce the overhead of establishing a new connection and improve the overall performance of your application.

Caching is another technique that can be used to improve the performance of your web service. Actix Web provides built-in support for caching using the actix-web::middleware::cache middleware. This middleware caches the response of a request and serves it from the cache if the same request is made again. This can significantly reduce the response time for frequently requested resources.

Actix Web also provides support for load balancing and clustering using third-party crates such as actix-web-nginx-proxy and actix-web-actors. These crates allow you to distribute the load of your application across multiple nodes and provide fault-tolerance and high availability.

Finally, profiling and benchmarking your application is an important step in optimizing its performance. Rust provides a powerful profiling and benchmarking infrastructure through the `car command-line tool. By profiling and benchmarking your application, you can identify performance bottlenecks and make targeted optimizations to improve the overall performance of your web service.

By using these techniques, you can build highly performant and scalable web services using Actix Web.

Deploying Actix Web applications to production

Deploying Actix Web applications to production involves several steps, including building the application, configuring the environment, and deploying the application to a server.

The first step is to build the application for deployment. You can do this using the cargo build --release command, which will compile the application with optimizations and generate a binary executable in the target/release directory.

Next, you need to configure the environment for the application. This includes setting environment variables for configuration options such as database connection details, secret keys, and other application-specific settings.

You also need to ensure that the server environment is properly configured for running the Actix Web application. This may include installing dependencies, configuring firewalls, and setting up SSL certificates.

Once the environment is set up, you can deploy the application to the server. There are several ways to deploy an Actix Web application, including using a reverse proxy such as Nginx or Apache, or deploying directly to a cloud provider such as AWS or Google Cloud.

When deploying the application, it’s important to ensure that it’s running in a secure and reliable manner. This includes monitoring the application for errors and performance issues, setting up automated backups and failover mechanisms, and following best practices for securing the server environment.

Finally, it’s important to test the deployed application thoroughly to ensure that it’s working correctly in the production environment. This may include testing for performance, scalability, and security issues.

By following these steps, you can deploy your Actix Web application to production with confidence, knowing that it’s running in a secure and reliable manner. Best practices for developing and maintaining Actix Web applications Here are some best practices for developing and maintaining Actix Web applications:

-

Follow the separation of concerns principle and keep your code organized into separate modules and files. This makes it easier to read, test, and maintain your code.

-

Use the Rust

Resulttype to handle errors in your code. This makes your code more robust and helps you handle errors gracefully. -

Use the

logcrate to log debug information and errors in your application. This makes it easier to debug issues and monitor the performance of your application. -

Use the

dotenvcrate to manage configuration variables in your application. This allows you to keep sensitive information such as API keys and database connection strings out of your code repository. -

Use the

actix-web-middlewarecrate to add middleware to your application. Middleware can be used to handle authentication, rate limiting, and other common tasks. -

Use the

actix-web-testcrate to write unit tests and integration tests for your application. This makes it easier to catch bugs early and ensure that your code is working correctly. -

Use version control to manage your code repository and keep track of changes over time. This allows you to roll back to previous versions if needed and collaborate with other developers more easily.

-

Follow best practices for security, such as using HTTPS, handling user input carefully to prevent SQL injection attacks, and using secure authentication and authorization mechanisms.

-

Regularly update your dependencies to ensure that you’re using the latest security patches and bug fixes.

-

Document your code and API endpoints using comments and OpenAPI specifications. This makes it easier for other developers to understand your code and build on top of your API.

By following these best practices, you can ensure that your Actix Web applications are well-designed, secure, and maintainable over time.

Chapter 2: Building RESTful APIs with Actix Web

Creating a basic RESTful API with Actix Web

To create a basic RESTful API with Actix Web, you can follow these steps:

- Start a new Rust project using Cargo:

cargo new my_api --bin- Add the Actix Web dependency to your

Cargo.tomlfile:

[dependencies]

actix-web = "3.3.2"- Create a new Rust file named

main.rsin thesrcdirectory and add the following code:

use actix_web::{get, post, web, App, HttpResponse, HttpServer, Responder};

#[get("/")]

async fn index() -> impl Responder {

HttpResponse::Ok().body("Hello world!")

}

#[post("/users")]

async fn create_user(user: web::Json<User>) -> impl Responder {

// code to create a new user

}

#[derive(serde::Deserialize)]

struct User {

name: String,

email: String,

}

#[actix_web::main]

async fn main() -> std::io::Result<()> {

HttpServer::new(|| {

App::new()

.service(index)

.service(create_user)

})

.bind("127.0.0.1:8080")?

.run()

.await

}This code defines two API endpoints: a GET endpoint at / that returns a “Hello world!” message, and a POST endpoint at /users that expects a JSON payload containing a user’s name and email address.

- Start the HTTP server by running the following command:

cargo runYour RESTful API is now up and running! You can test it by sending a GET request to http://localhost:8080/ or a POST request to http://localhost:8080/users with a JSON payload containing a user’s name and email address.

This is just a simple example of how to create a RESTful API with Actix Web. As your API grows in complexity, you can add more endpoints, use middleware to handle authentication and rate limiting, and use database libraries to interact with a database.

Handling HTTP requests and responses

Actix Web provides a set of tools to handle HTTP requests and responses in a simple and efficient way. Here are some examples:

Handling HTTP requests

Actix Web provides a set of macros to define HTTP request handlers. Here’s an example that defines a handler for a GET request to the root URL ("/"):

use actix_web::{get, HttpResponse, Responder};

#[get("/")]

async fn index() -> impl Responder {

HttpResponse::Ok().body("Hello world!")

}In this example, the get macro is used to define a handler for a GET request to the root URL. The async keyword indicates that this is an asynchronous function, and the Responder trait indicates that this function returns a type that can be converted to an HTTP response.

Inside the function, we create an HttpResponse object with a 200 OK status code and a body containing the string "Hello world!". The HttpResponse object implements the Responder trait, so we can return it directly from the function.

Handling HTTP responses

Actix Web provides a set of functions to create HTTP responses. Here’s an example that creates a 404 Not Found response:

use actix_web::{HttpResponse, http::StatusCode};

fn not_found() -> HttpResponse {

HttpResponse::NotFound()

.status(StatusCode::NOT_FOUND)

.body("404 Not Found")

}In this example, we create an HttpResponse object with a 404 Not Found status code and a body containing the string "404 Not Found". We use the status method to set the status code explicitly, even though it’s already set by the NotFound method.

We can use this function in our request handlers to return a 404 Not Found response:

use actix_web::{get, HttpResponse, Responder};

#[get("/")]

async fn index() -> impl Responder {

not_found()

}In this example, the index function returns the not_found function, which creates a 404 Not Found response.

Actix Web also provides functions to create other types of responses, such as redirects, error responses, and JSON responses. Implementing CRUD operations CRUD stands for Create, Read, Update, and Delete, which are the basic operations that can be performed on a data model. Actix Web provides a set of tools to implement CRUD operations in a RESTful API. Here’s an example of how to implement these operations using Actix Web:

use actix_web::{get, post, put, delete, web, HttpResponse, Responder};

struct Todo {

id: i32,

title: String,

completed: bool,

}

struct AppState {

todos: Vec<Todo>,

}

impl AppState {

fn new() -> Self {

AppState {

todos: Vec::new(),

}

}

}

#[get("/todos")]

async fn get_todos(state: web::Data<AppState>) -> impl Responder {

HttpResponse::Ok().json(&state.todos)

}

#[post("/todos")]

async fn create_todo(todo: web::Json<Todo>, state: web::Data<AppState>) -> impl Responder {

let id = state.todos.len() as i32;

let new_todo = Todo {

id,

title: todo.title.clone(),

completed: todo.completed,

};

state.todos.push(new_todo);

HttpResponse::Created().json(&new_todo)

}

#[put("/todos/{id}")]

async fn update_todo(

id: web::Path<i32>,

todo: web::Json<Todo>,

state: web::Data<AppState>,

) -> impl Responder {

let index = state.todos.iter().position(|t| t.id == *id);

if let Some(index) = index {

let updated_todo = Todo {

id: *id,

title: todo.title.clone(),

completed: todo.completed,

};

state.todos[index] = updated_todo;

HttpResponse::Ok().json(&updated_todo)

} else {

HttpResponse::NotFound().finish()

}

}

#[delete("/todos/{id}")]

async fn delete_todo(id: web::Path<i32>, state: web::Data<AppState>) -> impl Responder {

let index = state.todos.iter().position(|t| t.id == *id);

if let Some(index) = index {

let deleted_todo = state.todos.remove(index);

HttpResponse::Ok().json(&deleted_todo)

} else {

HttpResponse::NotFound().finish()

}

}In this example, we define a Todo struct to represent our data model, and an AppState struct to hold our application state. We define four request handlers using the get, post, put, and delete macros to handle GET, POST, PUT, and DELETE requests, respectively.

The get_todos handler returns a list of todos in the application state as a JSON array. The create_todo handler creates a new todo and adds it to the application state, and returns the new todo as a JSON object with a 201 Created status code. The update_todo handler updates an existing todo with the given ID, and returns the updated todo as a JSON object with a 200 OK status code. The delete_todo handler deletes an existing todo with the given ID, and returns the deleted todo as a JSON object with a 200 OK status code.

We use the web::Data type to pass the application state to the request handlers. The web::Json type is used to automatically deserialize the JSON request body into a Todo struct.

With these request handlers, we can perform CRUD operations on our data model using HTTP requests. For example, we can create a new todo using a POST request to /todos with a JSON body:

POST /

todos HTTP/1.1

Content-Type: application/json

{

"title": "Buy groceries",

"completed": false

}The server would then create a new todo with the provided title and completed status and return a response with the new todo’s ID:

HTTP/1.1 201 Created

Content-Type: application/json

{

"id": 1,

"title": "Buy groceries",

"completed": false

}To implement this behavior in Actix Web, we can define a new route with a handler function that reads the request body and creates a new todo in our data store:

async fn create_todo(

pool: web::Data<DbPool>,

todo: web::Json<NewTodo>,

) -> Result<HttpResponse, Error> {

let conn = pool.get()?;

let new_todo = todo.into_inner();

let todo = Todo::create(&conn, new_todo)?;

Ok(HttpResponse::Created().json(todo))

}

fn config(cfg: &mut web::ServiceConfig) {

cfg.service(

web::resource("/todos")

.route(web::post().to(create_todo))

);

}In this example, we use the web::Json extractor to parse the request body into a NewTodo struct, which we can then use to create a new Todo object in our database using the Todo::create method. We then return the created todo as JSON in the response body, along with the 201 Created status code.

We can similarly implement the other CRUD operations using the appropriate HTTP methods and route handlers. For example, to retrieve a list of all todos, we could define a route and handler like this:

async fn get_todos(pool: web::Data<DbPool>) -> Result<HttpResponse, Error> {

let conn = pool.get()?;

let todos = Todo::list(&conn)?;

Ok(HttpResponse::Ok().json(todos))

}

fn config(cfg: &mut web::ServiceConfig) {

cfg.service(

web::resource("/todos")

.route(web::get().to(get_todos))

.route(web::post().to(create_todo))

);

}In this example, we use the Todo::list method to retrieve all todos from the database and return them as JSON in the response body with the 200 OK status code.

Handling errors and status codes

In any real-world application, it’s important to handle errors and return appropriate HTTP status codes to clients. Actix Web provides several tools to help with this.

One way to handle errors is to use the Result type in our handler functions, with the ? operator to propagate errors up the call stack. For example, in our create_todo function, we return a Result<HttpResponse, Error>. This allows us to handle errors returned by our Todo::create method, as well as any errors that might occur when accessing the database connection pool, using the ? operator.

Actix Web also provides several convenience methods for returning HTTP status codes in response to client requests. For example, we can use the HttpResponse::BadRequest method to return a 400 Bad Request status code when a client sends a request with invalid data:

async fn create_todo(

pool: web::Data<DbPool>,

todo: web::Json<NewTodo>,

) -> Result<HttpResponse, Error> {

let conn = pool.get()?;

let new_todo = todo.into_inner();

if new_todo.title.is_empty() {

return Err(error::ErrorBadRequest("Title is required"));

}

let todo = Todo::create(&conn, new_todo)?;

Ok(HttpResponse::Created().json(todo))

}In this example, we check whether the title field in the NewTodo struct is empty, and return a 400 Bad Request status code with a helpful error message if it is.

We can also use the HttpResponse::NotFound method to return a 404 Not Found status code when a client requests a resource that doesn’t exist, and the HttpResponse::InternalServerError method to return a 500 Internal Server Error status code when an unexpected error occurs on the server.

async fn get_todo(

pool: web::Data<DbPool>,

path: web::Path<(i32,)>,

) -> Result<HttpResponse, Error> {

let conn = pool.get()?;

let id = path.0;

let todo = Todo::find(&conn, id).ok_or_else(|| error::ErrorNotFound(format!("Todo {} not found", id)))?;

Ok(HttpResponse::Ok().json(todo))

}In this example, we use the Todo::find method to retrieve a single todo by its ID from the database. If the todo doesn’t exist, we return a 404 Not Found status code with an error message. If everything succeeds, we return the todo as JSON with a 200 OK status code.

By returning appropriate HTTP status codes and error messages, we can help clients understand what went wrong with their requests and take appropriate action.

Serializing and deserializing data using JSON and other formats

Serialization is the process of converting data structures or objects into a format that can be stored or transmitted over a network. Deserialization is the reverse process, where the serialized data is converted back into its original form.

Actix Web supports serialization and deserialization of data using various formats, including JSON, XML, and MsgPack.

To use JSON serialization in Actix Web, we need to enable the serde and actix-web crates in our Cargo.toml file. Then, we can use the Json helper type provided by Actix Web to deserialize JSON data into Rust structs, and to serialize Rust structs into JSON data.

Here’s an example of deserializing a JSON request body into a Rust struct:

use serde::{Deserialize, Serialize};

#[derive(Debug, Deserialize)]

struct CreateUser {

name: String,

email: String,

password: String,

}

async fn create_user(info: web::Json<CreateUser>) -> impl Responder {

println!("{:?}", info);

// ... create user logic ...

}In the above code, we define a CreateUser struct with name, email, and password fields. We use the serde crate’s Deserialize trait to specify how to deserialize JSON data into this struct.

We then define an async function called create_user that takes a web::Json<CreateUser> parameter. The web::Json type is a helper type provided by Actix Web that automatically deserializes the request body into a CreateUser struct.

We can also serialize Rust structs into JSON using the Json type:

use serde::{Deserialize, Serialize};

#[derive(Debug, Serialize)]

struct User {

id: u32,

name: String,

email: String,

}

async fn get_user(id: web::Path<u32>) -> impl Responder {

let user = User {

id: id.into_inner(),

name: "John Doe".to_string(),

email: "johndoe@example.com".to_string(),

};

web::Json(user)

}In the above code, we define a User struct with id, name, and email fields. We use the serde crate’s Serialize trait to specify how to serialize this struct into JSON.

We then define an async function called get_user that takes a web::Path<u32> parameter representing the user ID. Inside the function, we create a User struct with dummy data, and then return a web::Json response containing the serialized User struct.

Actix Web also supports serialization and deserialization of other formats, such as XML and MsgPack, using the actix-web-httpmessage and serde-xml-rs crates, and the actix-web-msgpack and rmp-serde crates, respectively.

Validating and sanitizing input data

Validating and sanitizing input data is a critical aspect of building a robust and secure web application. Actix Web provides several tools to validate and sanitize input data before processing it.

One of the most commonly used validation tools in Actix Web is the validator crate. This crate provides a set of validation functions that can be used to validate input data. For example, we can use the is_email function to validate an email address:

use validator::validate_email;

fn validate_email_address(email: &str) -> bool {

validate_email(email).is_ok()

}We can also use the sanitize_email function to sanitize an email address:

use validator::sanitize::sanitize_email;

fn sanitize_email_address(email: &str) -> String {

sanitize_email(email)

}Actix Web also provides built-in support for validating JSON input using the serde_json crate. We can use the #[derive(Deserialize, Serialize)] attribute to automatically generate a JSON serializer and deserializer for our struct:

use serde::{Deserialize, Serialize};

#[derive(Deserialize, Serialize)]

struct Todo {

title: String,

completed: bool,

}We can then use the Json extractor to automatically deserialize the request body into our Todo struct:

use actix_web::{web, App, HttpResponse, Responder};

async fn create_todo(todo: web::Json<Todo>) -> impl Responder {

// process the todo

HttpResponse::Ok()

}Actix Web also provides built-in support for validating query parameters and path parameters using the web::Path and web::Query extractors. For example, we can use the web::Path extractor to extract a path parameter and validate it using the validator crate:

use actix_web::{web, App, HttpResponse, Responder};

use validator::Validate;

#[derive(Validate)]

struct PathParams {

#[validate(length(min = 3, max = 10))]

id: String,

}

async fn get_todo_by_id(path_params: web::Path<PathParams>) -> impl Responder {

// process the request

HttpResponse::Ok()

}In the example above, we use the #[derive(Validate)] attribute to automatically generate validation code for our PathParams struct. We then use the web::Path extractor to extract the path parameter and validate it using the validator crate.

Implementing pagination, filtering, and sorting in your API

Pagination, filtering, and sorting are important features that are often required in RESTful APIs. In Actix Web, we can implement these features by parsing the query string parameters and using them to modify the response returned by our handlers.

Pagination

Pagination is used to limit the number of results returned by an API and to allow clients to navigate through large result sets. To implement pagination, we need to add two query string parameters to our API: page and limit. The page parameter specifies the page number of the result set, while the limit parameter specifies the maximum number of results per page.

Here’s an example of how to implement pagination in Actix Web:

async fn get_users(

web::Query(pagination): web::Query<PaginationParams>,

// Other parameters

) -> impl Responder {

let page = pagination.page.unwrap_or(1);

let limit = pagination.limit.unwrap_or(10);

let offset = (page - 1) * limit;

let users = get_users_from_database(offset, limit);

// Return paginated response

}In the example above, we parse the page and limit parameters using a custom PaginationParams struct, and calculate the offset for the database query. We then fetch the users from the database using the offset and limit, and return a paginated response.

Filtering

Filtering is used to retrieve a subset of data that matches a specific set of criteria. In Actix Web, we can implement filtering by adding query string parameters to our API that correspond to the fields we want to filter on.

Here’s an example of how to implement filtering in Actix Web:

async fn get_users(

web::Query(filter): web::Query<UserFilter>,

// Other parameters

) -> impl Responder {

let users = get_users_from_database_filtered(&filter);

// Return filtered response

}In the example above, we parse the filter query string parameter using a custom UserFilter struct, and use it to fetch the users from the database that match the filter criteria.

Sorting

Sorting is used to order the results of an API by one or more fields. In Actix Web, we can implement sorting by adding query string parameters to our API that correspond to the fields we want to sort on.

Here’s an example of how to implement sorting in Actix Web:

async fn get_users(

web::Query(sort): web::Query<UserSort>,

// Other parameters

) -> impl Responder {

let users = get_users_from_database_sorted(&sort);

// Return sorted response

}In the example above, we parse the sort query string parameter using a custom UserSort struct, and use it to fetch the users from the database sorted by the specified fields.

Caching responses to improve performance

Caching responses is a technique used to improve the performance of web applications by reducing the number of requests that need to be made to the server. By caching frequently accessed data, you can reduce the amount of time it takes to serve the data to the client.

In Actix Web, you can use a middleware called HttpCache to implement caching for your responses. This middleware uses the HTTP Cache-Control header to determine how long a response should be cached for.

To use HttpCache, you first need to add it as middleware to your application:

use actix_web::middleware::HttpCache;

let app = App::new()

.wrap(HttpCache::new())

.service(/* your routes */);This will enable caching for all responses that do not have an explicit Cache-Control header.

To specify a cache time for a specific response, you can use the Cache-Control header:

use actix_web::{HttpResponse, http::header};

fn my_handler() -> HttpResponse {

let response = /* create your response */;

// Cache the response for 5 minutes

response.header(header::CACHE_CONTROL, "max-age=300");

response

}This will cache the response for 5 minutes.

Note that caching can also be implemented at the server level using tools like Varnish or Nginx. However, using a middleware like HttpCache can give you more fine-grained control over caching for individual responses in your application.

Securing your API with HTTPS and SSL/TLS

Securing your API with HTTPS and SSL/TLS is crucial for protecting your users’ data and preventing unauthorized access to your API. HTTPS is an extension of the HTTP protocol that uses encryption to protect the communication between the client and the server. SSL/TLS is the protocol used to establish a secure connection over HTTPS.

In Actix Web, you can use the openssl crate to enable SSL/TLS support in your application. Here’s an example of how to use openssl to enable SSL/TLS:

use openssl::ssl::{SslAcceptor, SslFiletype, SslMethod};

let mut builder = SslAcceptor::mozilla_intermediate(SslMethod::tls()).unwrap();

builder.set_private_key_file("/path/to/private_key.pem", SslFiletype::PEM).unwrap();

builder.set_certificate_chain_file("/path/to/certificate_chain.pem").unwrap();

let app = App::new()

.service(/* your routes */);

let server = HttpServer::new(app)

.bind_openssl("0.0.0.0:8443", builder)

.unwrap();In this example, we create an SslAcceptor using the mozilla_intermediate method to use a secure SSL/TLS configuration. We then set the paths to our private key and certificate chain files. Finally, we create an HttpServer and bind it to port 8443 using SSL/TLS.

Once you have enabled SSL/TLS in your application, you can use HTTPS URLs to access your API. For example, https://example.com/api/v1.

It’s important to note that you will need to obtain a valid SSL/TLS certificate from a trusted Certificate Authority (CA) to enable HTTPS in your application. There are many CAs that offer SSL/TLS certificates, and some hosting providers may offer them as part of their services.

Implementing rate limiting and throttling to prevent abuse

Implementing rate limiting and throttling in your API is an important way to prevent abuse and ensure that your API is performing optimally. Rate limiting and throttling can help prevent denial of service attacks, protect your resources, and ensure a good user experience for your users.

In Actix Web, you can use the ratelimit crate to implement rate limiting and throttling in your API. Here’s an example of how to use ratelimit to limit the number of requests per minute from a specific IP address:

use actix_web::{Error, HttpResponse, Result};

use ratelimit::{MemoryStore, RateLimiter};

async fn index(rate_limiter: web::Data<RateLimiter<MemoryStore>>) -> Result<HttpResponse, Error> {

let client_ip = "127.0.0.1".parse().unwrap();

let rate_limit_key = format!("ip:{}", client_ip);

if rate_limiter.check(&rate_limit_key, 60, 1).is_err() {

return Err(Error::TooManyRequests());

}

// Process the request

Ok(HttpResponse::Ok().finish())

}

fn main() {

let rate_limiter = RateLimiter::<MemoryStore>::new(100, Duration::from_secs(60));

let rate_limiter = web::Data::new(rate_limiter);

let app = App::new()

.service(web::resource("/").to(index))

.app_data(rate_limiter);

HttpServer::new(app)

.bind("127.0.0.1:8080")

.unwrap()

.run()

.unwrap();

}In this example, we create a RateLimiter using the MemoryStore backend to store rate limiting information in memory. We then define an index function that takes a Data<RateLimiter<MemoryStore>> parameter to access the rate limiter. Inside the index function, we check the rate limit for the client IP address, allowing only one request per minute. If the rate limit is exceeded, we return a TooManyRequests error to the client.

Finally, we create an App with the index route and the rate_limiter data, and we bind the HttpServer to port 8080.

This is just a simple example of how to use ratelimit in Actix Web. There are many other ways to configure and use ratelimit, such as using a Redis backend, customizing the rate limit window and interval, and applying different rate limits to different routes or users.

Versioning your API to ensure backward compatibility

API versioning is an important aspect of developing and maintaining an API. It ensures backward compatibility and provides a way to add new features or make changes without breaking existing client applications.

There are different approaches to versioning an API, but one common approach is to include the version number in the URL. For example, instead of using /todos, we can use /v1/todos for the first version of our API. If we need to make breaking changes, we can create a new version of the API and use a different URL, such as /v2/todos.

Another approach is to include the version number in the request headers. This allows clients to specify which version of the API they want to use. For example, we can use the Accept header to specify the version:

Accept: application/vnd.myapi.v1+jsonThis tells the server to return a response in the JSON format for version 1 of the API. If we want to use a different version, we can change the version number in the header.

Regardless of the approach, it’s important to document the API versioning scheme and provide clear guidelines for clients to follow. This helps avoid confusion and ensures that clients can use the API effectively.

Building a client library for your API in Rust

Building a client library for your API in Rust can be a great way to simplify integration with your API and provide a more seamless experience for your users. Here are some general steps you can follow to build a client library:

-

Define the API endpoints: Start by defining the API endpoints that your client library will interact with. This can include HTTP methods, endpoint URLs, and request and response data structures.

-

Choose a HTTP client library: Choose a HTTP client library that fits your needs. Popular options in the Rust ecosystem include reqwest, hyper, and surf.

-

Define the client library interface: Define an interface for the client library that makes it easy for developers to use. This can include functions for each API endpoint, as well as helper functions for authentication, pagination, error handling, and other common tasks.

-

Implement the API endpoints: Implement the API endpoints using the HTTP client library. Make sure to handle errors, parse response data, and handle any necessary serialization and deserialization.

-

Test the client library: Test the client library to ensure that it works as expected. Write unit tests for each API endpoint, as well as integration tests that cover common use cases.

-

Publish the client library: Publish the client library to a package registry such as crates.io so that other developers can use it.

By following these steps, you can create a powerful client library that makes it easy for developers to interact with your API in Rust.

Chapter 3: Building Real-Time Applications with Actix Web and WebSockets

Introduction to WebSockets

WebSockets are a protocol for two-way communication between a client and a server over a single TCP connection. Unlike traditional HTTP requests, WebSockets allow the server to push data to the client without the client having to request it.

WebSockets are often used in real-time web applications such as chat rooms, online gaming, and financial trading platforms. They are supported by most modern web browsers and can be implemented using a variety of programming languages and frameworks.

In a WebSocket connection, the client and server start with an HTTP handshake, during which the client sends a special HTTP request containing an Upgrade header indicating that it wants to establish a WebSocket connection. If the server agrees to the upgrade, it responds with a special HTTP response that indicates that the WebSocket connection has been established.

After the handshake, both the client and server can send messages to each other at any time using a simple binary or text-based protocol. The messages can be arbitrarily large, and can contain any data that can be serialized to binary or text format.

WebSockets can be implemented using low-level APIs or high-level frameworks. In Rust, Actix Web provides a high-level WebSocket API that makes it easy to implement real-time features in web applications.

Creating a real-time chat application

Creating a real-time chat application using WebSockets can be a great way to explore the power of this protocol. In Rust, Actix Web provides a high-level WebSocket API that makes it easy to implement real-time features in web applications.

Here is an example of how you can create a real-time chat application using Actix Web and WebSockets:

-

Start by creating a new Actix Web project using

cargo new <project_name> --bin. -

Add the following dependencies to your

Cargo.tomlfile:actix-web = "3.2" actix-rt = "2.0" serde = { version = "1.0", features = ["derive"] } serde_json = "1.0"The

serdeandserde_jsondependencies will be used to serialize and deserialize messages in JSON format. -

In your

main.rsfile, add the following code to set up a WebSocket endpoint:use actix_web::{web, App, HttpRequest, HttpResponse, HttpServer, Responder}; use actix_web_actors::ws; use serde::{Deserialize, Serialize}; use std::collections::HashMap; #[derive(Debug, Deserialize, Serialize)] struct ChatMessage { username: String, message: String, } struct ChatSession { id: usize, username: String, room: String, clients: HashMap<usize, ws::WebSocketWriter>, } impl ChatSession { fn new(id: usize, username: String, room: String) -> Self { ChatSession { id, username, room, clients: HashMap::new(), } } fn add_client(&mut self, client: ws::WebSocketWriter) { self.clients.insert(self.id, client); } fn remove_client(&mut self, id: usize) { self.clients.remove(&id); } fn broadcast_message(&mut self, message: &str) { for client in self.clients.values_mut() { client.send(message); } } } async fn chat_route(req: HttpRequest, stream: web::Payload) -> impl Responder { let session = ChatSession::new(0, "anonymous".to_owned(), "general".to_owned()); ws::start( session, &req, stream, |session: &mut ChatSession, msg: Result<ws::Message, ws::ProtocolError>| { match msg { Ok(ws::Message::Ping(msg)) => { session.broadcast_message(&msg.to_string()); } Ok(ws::Message::Text(text)) => { let message: ChatMessage = serde_json::from_str(&text).unwrap(); session.broadcast_message(&text); } _ => {} } Ok(()) }, ) } #[actix_web::main] async fn main() -> std::io::Result<()> { HttpServer::new(|| { App::new() .route("/chat", web::get().to(chat_route)) }) .bind("127.0.0.1:8080")? .run() .await } -

In the above code, we define a

ChatMessagestruct to represent messages sent by clients. We also define aChatSessionstruct to represent a chat session, which consists of a unique ID, a username, a room name, and a map of clients connected to the session. -

In the

chat_routefunction, we create a newChatSessionstruct for each WebSocket connection and add it to the list of sessions. We also send a welcome message to the client.

async fn chat_route(req: HttpRequest, stream: web::Payload) -> Result<HttpResponse, Error> {

let resp = ws::start(

ChatSession {

id: 0,

name: None,

room: "general".to_owned(),

addr: None,

sessions: Arc::new(Mutex::new(Vec::new())),

},

&req,

stream,

);

if let Ok(ref resp) = resp {

// Add new session

let mut sessions = SESSIONS.lock().await;

sessions.push(resp.get_inner().clone());

}

resp

}- In the

ChatSessionstruct, we handle incoming WebSocket messages in thehandle_messagemethod. We parse the message as a string and check if it starts with the/nickcommand, which is used to set the user’s nickname. If it does, we update thenamefield of the session.

impl Actor for ChatSession {

type Context = ws::WebsocketContext<Self>;

fn started(&mut self, ctx: &mut Self::Context) {

// Add session to list of sessions

self.addr = Some(ctx.address());

let mut sessions = self.sessions.lock().unwrap();

sessions.push(ctx.address().recipient());

}

}

impl StreamHandler<Result<ws::Message, ws::ProtocolError>> for ChatSession {

fn handle(&mut self, msg: Result<ws::Message, ws::ProtocolError>, ctx: &mut Self::Context) {

match msg {

Ok(ws::Message::Text(text)) => {

// Handle text message

if text.starts_with("/nick") {

// Set nickname

let nickname = text.splitn(2, ' ').nth(1).unwrap_or("");

self.name = Some(nickname.to_owned());

ctx.text(format!("Nickname set to {}", nickname));

} else {

// Broadcast message to all sessions in the same room

let sessions = self.sessions.lock().unwrap();

for session in &*sessions {

if let Some(addr) = session.get() {

addr.do_send(ChatMessage {

from: self.name.clone(),

content: text.clone(),

room: self.room.clone(),

}).unwrap();

}

}

}

}

// Handle other message types

_ => {}

}

}

}- Finally, we define a

ChatMessagestruct to represent a message sent by a client, and aChatEventenum to represent various events that can occur in the chat, such as a new client joining or leaving the chat or a new message being sent.

#[derive(Message)]

#[rtype(result = "()")]

struct ChatMessage {

from: Option<String>,

content: String,

room: String,

}

#[derive(Message)]

#[rtype(result = "()")]

enum ChatEvent {

Join(String, Recipient<ChatMessage>),

Leave(String),

Message(ChatMessage),

}With these components in place, we now have the basic structure of a real-time chat application using WebSockets in Actix Web. We can start the application with cargo run and navigate to http://localhost:8080 in a web browser to open the chat interface. Multiple users can connect to the chat and send messages in real time.

Broadcasting messages to multiple clients

To broadcast messages to multiple clients in a chat session, we can maintain a list of all connected clients in the session and send the message to each client.

We can add a Vec field to the ChatSession struct to store a list of connected clients:

struct ChatSession {

id: usize,

name: String,

clients: Vec<mpsc::UnboundedSender<String>>,

...

}When a new client connects to the session, we can add its sender to the list:

async fn chat_route(

req: HttpRequest,

stream: web::Payload,

srv: web::Data<Arc<ChatServer>>,

) -> Result<HttpResponse, Error> {

...

let (tx, rx) = mpsc::unbounded_channel();

let id = session.next_id().await;

session.clients.push(tx);

let resp = ws::start(ChatSession {

id,

name: String::new(),

clients: session.clients.clone(),

rx,

}, &req, stream)?;

Ok(resp)

}Note that we use clone() to create a new vector containing all connected clients, so that each session has its own list of clients.

When a client sends a message, we can broadcast it to all other clients in the session:

impl Actor for ChatSession {

type Context = ws::WebsocketContext<Self>;

fn started(&mut self, ctx: &mut Self::Context) {

...

}

fn stopped(&mut self, ctx: &mut Self::Context) {

...

}

}

impl StreamHandler<Result<ws::Message, ws::ProtocolError>> for ChatSession {

fn handle(&mut self, msg: Result<ws::Message, ws::ProtocolError>, ctx: &mut Self::Context) {

match msg {

Ok(ws::Message::Text(text)) => {

for tx in &self.clients {

let _ = tx.send(text.clone());

}

}

...

}

}

}In the handle() method, we iterate over the list of clients and send a copy of the message to each client using its sender. We use clone() again to create a new copy of the message, since tx.send() takes ownership of the message.

With this implementation, all clients connected to a chat session will receive messages sent by any other client.

Handling WebSocket errors and disconnections

Handling WebSocket errors and disconnections is an important part of building a robust real-time application. When a WebSocket connection is closed due to an error or by the client, we need to be able to handle that event gracefully and clean up any resources that were being used by the session.

In Actix Web, we can handle WebSocket errors and disconnections by implementing the StreamHandler trait for our session type. This trait defines a number of methods that we can override to handle different types of events that can occur on a WebSocket stream.

For example, the handle_error method is called when an error occurs on the WebSocket stream. We can use this method to log the error and take any appropriate action, such as closing the connection:

use actix::prelude::*;

use actix_web_actors::ws;

struct ChatSession {

// ...

}

impl Actor for ChatSession {

type Context = ws::WebsocketContext<Self>;

// ...

}

impl StreamHandler<Result<ws::Message, ws::ProtocolError>> for ChatSession {

fn handle(&mut self, msg: Result<ws::Message, ws::ProtocolError>, ctx: &mut Self::Context) {

// Handle incoming messages here

}

fn handle_error(&mut self, err: ws::ProtocolError, ctx: &mut Self::Context) {

// Log the error

error!("WebSocket error: {:?}", err);

// Close the connection

ctx.close(None);

}

fn on_shutdown(&mut self, ctx: &mut Self::Context) {

// Clean up any resources being used by the session

}

}In addition to the handle_error method, the StreamHandler trait also defines methods for handling other types of events, such as the on_shutdown method, which is called when the WebSocket stream is closed.

By implementing these methods, we can ensure that our WebSocket sessions are robust and handle errors and disconnections gracefully.

Integrating WebSockets with Actix Web’s routing and middleware

Integrating WebSockets with Actix Web’s routing and middleware involves adding WebSocket support to an existing Actix Web application. This can be done by adding a new route to handle WebSocket connections and configuring any necessary middleware.

To add WebSocket support to an Actix Web application, first create a new route that handles WebSocket connections. This can be done using the route method, which takes a path and a closure that returns an HttpResponse object or an impl Responder object. In the closure, we can use the ws method to create a new WebSocket route:

use actix_web::{web, HttpResponse};

use actix_web_actors::ws;

fn websocket_route(r: &mut web::ServiceConfig) {

r.route("/ws/", web::get().to(ws_index));

}

fn ws_index(req: HttpRequest, stream: web::Payload) -> impl Responder {

let res = ws::start(ChatSession::new(), &req, stream);

println!("WebSocket connection started");

res

}In this example, we create a new WebSocket route at the path /ws/ that calls the ws_index function. The ws::start method is used to start a new WebSocket connection using the ChatSession struct we defined earlier.

Next, we need to add any necessary middleware for WebSocket connections. This can be done using the wrap method, which takes a closure that returns a middleware object. For example, to add a middleware that logs all incoming WebSocket messages, we could define a middleware function like this:

use actix_web::{HttpRequest, web, Error};

use actix_web_actors::ws;

async fn logging_middleware(

req: HttpRequest,

ws: web::Payload,

srv: web::Data<Addr<ChatSession>>,

) -> Result<HttpResponse, Error> {

let res = ws::start(ChatSession::new(), &req, ws)?;

println!("WebSocket connection started");

Ok(res)

}In this example, we define a middleware function that takes an incoming HttpRequest, a Payload object, and a Data object containing the Addr of the ChatSession. We then use the ws::start method to start a new WebSocket connection with the ChatSession, and log a message when the connection is started.

Finally, we need to add the middleware to our Actix Web application using the wrap method:

let app = App::new()

.data(addr.clone())

.wrap(middleware::Logger::default())

.wrap_fn(|req, srv| {

let srv = srv.clone();

logging_middleware(req, srv)

})

.service(websocket_route);In this example, we add the Logger middleware to log all incoming requests, and add the logging_middleware middleware to handle incoming WebSocket connections. We then add our websocket_route to the Actix Web application using the service method.

Scaling your WebSocket server with multiple workers and load balancing

To scale our WebSocket server with multiple workers and load balancing, we can use a combination of process-based parallelism and load balancing techniques.

First, we can use Actix Web’s built-in support for multiple workers to run multiple instances of our application in parallel. This can be done by setting the --workers option when starting our application with actix-web command.

For example, to run our WebSocket server with four worker processes, we can use the following command:

$ actix-web run --workers 4Each worker process will run a separate instance of our application, and incoming connections will be distributed across these instances by the operating system’s networking stack.

However, to further improve scalability and reliability, we can also use a load balancer to distribute incoming connections across multiple servers running our application.

One popular load balancing solution is Nginx, which can be configured to distribute WebSocket connections across multiple backend servers using the proxy_pass directive.

For example, to load balance WebSocket connections across two backend servers running our application on ports 8080 and 8081, we can use the following configuration:

http {

upstream websocket {

server localhost:8080;

server localhost:8081;

}

server {

listen 80;

location /chat {

proxy_pass http://websocket;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "upgrade";

}

}

}This configuration sets up a reverse proxy for WebSocket connections on the /chat path, which distributes connections across the two backend servers using the websocket upstream.

By using process-based parallelism and load balancing techniques, we can ensure that our WebSocket server can handle a large number of concurrent connections and provide a scalable and reliable real-time communication solution.

Implementing custom WebSocket protocols and message formats

Actix Web provides support for the WebSocket protocol through the actix-web-socket crate, which allows you to create WebSocket servers that can handle custom protocols and message formats.

To implement a custom WebSocket protocol, you can define a new type that implements the WebSocketProtocol trait. This trait defines methods for parsing incoming WebSocket messages and generating outgoing messages.

Here’s an example of how you can define a custom WebSocket protocol:

use actix_web::web::Bytes;

use actix_web_socket::{Message, WebSocketProtocol};

struct MyProtocol;

impl WebSocketProtocol for MyProtocol {

fn on_message(&mut self, msg: Message) -> Option<Message> {

// Parse incoming message

let bytes = match msg {