Chapter 1: The Birth of AI

Introduction to the concept of artificial intelligence

Artificial intelligence (AI) is a branch of computer science that deals with the creation of intelligent agents, which are systems that can reason, learn, and act autonomously. AI research has been highly successful in developing effective techniques for solving a wide range of problems, from game playing to medical diagnosis.

One of the most important concepts in AI is machine learning, a subfield of AI that allows systems to learn from data without being explicitly programmed. Instead of following hand-written rules, the system is trained on a dataset of examples and is then expected to generalize, that is, to make accurate predictions about new examples it has not seen before.
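
As a minimal illustration of learning from examples, the Python sketch below fits a straight line to a handful of training examples using least squares, then uses the fitted line to predict an input it has never seen. The data (hours studied versus exam score) and the function name `fit_line` are hypothetical. Real machine learning systems use far larger datasets and more flexible models, but they follow the same train-then-generalize pattern.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to the examples by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training examples: hours studied -> exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

slope, intercept = fit_line(hours, scores)  # "training" on the examples
prediction = slope * 6 + intercept          # generalizing to an unseen input
print(f"slope={slope:.1f}, predicted score for 6 hours={prediction:.1f}")
```

The program is never told the rule that relates hours to scores. It estimates a slope of 4.1 points per hour from the data and predicts a score of about 72.3 for six hours of study.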

Another important concept in AI is the idea of natural language processing (NLP). NLP is a field of AI that deals with the interaction between computers and human language. NLP systems can be used to perform a variety of tasks, such as text translation, speech recognition, and question answering.

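AI is a rapidly evolving field, and there are many exciting new developments on the horizon. Much of the recent progress has come from deep learning, a family of machine learning methods based on artificial neural networks with many layers. Neural networks date back decades, but deep learning took off in the early 2010s, when large datasets and graphics processors (GPUs) made it practical to train big networks. It has been very successful in a number of areas, including image recognition, speech recognition, and natural language processing.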

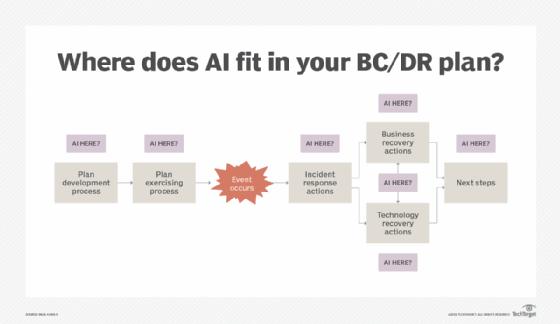

As AI continues to evolve, it is likely to have a profound impact on our lives. AI systems are already being used in a variety of industries, and their use is likely to grow. AI has the potential to revolutionize many aspects of our lives, from the way we work to the way we interact with the world around us.

Here are some of the ways that AI is being used today:

- Self-driving cars: AI is being used to develop self-driving cars that can navigate roads and avoid obstacles with little or no human input.

- Medical diagnosis: AI is being used to develop systems that can help doctors diagnose diseases more accurately.

- Customer service: AI is being used to develop chatbots that can answer customer questions and resolve issues without the need for human intervention.

- Fraud detection: AI is being used to develop systems that can detect fraudulent activity, such as credit card fraud and insurance fraud.

These are just a few examples of the many ways that AI is being used today. As AI continues to evolve, it is likely to have an even greater impact on our lives.

Alan Turing and the foundations of AI

Alan Turing was a British mathematician and logician who is often called the father of theoretical computer science and of artificial intelligence (AI). In 1936, he published a paper titled “On Computable Numbers, with an Application to the Entscheidungsproblem,” in which he introduced the Turing machine, an abstract model of computation. The Turing machine remains the standard formal definition of what it means for a problem to be computable: any problem a modern computer can solve, a Turing machine can also solve in principle, given enough time and tape.
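
A Turing machine consists of an unbounded tape of symbols, a head that reads and writes one cell at a time, and a finite table of rules of the form “in state q, reading symbol s: write s′, move left or right, switch to state q′.” The Python sketch below is a minimal simulator written for illustration, not Turing’s own formulation. The tape is stored sparsely in a dictionary, `_` marks a blank cell, and the example rule table (also illustrative) adds 1 to a binary number. The names `run_turing_machine` and `increment_rules` are hypothetical.

```python
def run_turing_machine(rules, tape, head, state, halt_state="halt", max_steps=1000):
    """Simulate a one-tape Turing machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is -1 for left and +1 for right. Blank cells read as "_".
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, "_")
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    # Read back the written part of the tape, dropping surrounding blanks.
    return "".join(cells.get(i, "_") for i in range(min(cells), max(cells) + 1)).strip("_")


# Illustrative rule table: add 1 to a binary number, starting with the
# head on the rightmost digit and a pending carry.
increment_rules = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", -1, "halt"),   # 0 + carry = 1, done
    ("carry", "_"): ("1", -1, "halt"),   # ran off the left end: new leading 1
}

tape = "1011"  # binary 11
print(run_turing_machine(increment_rules, tape, head=len(tape) - 1, state="carry"))  # 1100
```

The rule table plays the role of a program. The Church-Turing thesis holds that any procedure that can be carried out mechanically can be expressed as a table like this one, given enough tape.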

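Turing also proposed the Turing test in his 1950 paper “Computing Machinery and Intelligence.” The test asks whether a machine can exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human: a judge holds text conversations with both a machine and a person and tries to tell which is which. The Turing test remains an influential reference point in debates about machine intelligence, although it is rarely used as a formal benchmark in AI research.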

Turing’s work laid the foundations for the field of AI, and his ideas continue to inspire and guide AI researchers today. He was a true pioneer in the field, and his work has had a profound impact on the way we think about intelligence and machines.

Here are some of the key contributions that Alan Turing made to the field of AI:

- He introduced the Turing machine, a theoretical model of computation that remains the standard way of defining what can and cannot be computed.

- He proposed the Turing test, a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

- He helped found the theory of computation, which underpins modern computer science.

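- He played a leading role in breaking the German Enigma cipher at Bletchley Park during World War II, including designing the Bombe, an electromechanical machine used to find Enigma settings. (Colossus, an electronic computer often associated with him, was designed by Tommy Flowers to break a different German cipher, the Lorenz cipher.)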

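- His 1952 work on morphogenesis, which used mathematical models to explain how biological patterns such as spots and stripes could arise, is often cited as a forerunner of artificial life, the study of life-like processes in artificial systems.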

Turing’s work has had a profound impact on the field of AI, and he is considered to be one of the most important figures in the history of computer science. His work has helped to shape the way we think about intelligence and machines, and it has inspired and guided AI researchers for decades.

The Dartmouth Conference and the birth of AI as a field

The Dartmouth Summer Research Project on Artificial Intelligence, held in Hanover, New Hampshire, in the summer of 1956, is widely considered to be the founding event of the field of artificial intelligence (AI). The conference was organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, and it brought together a group of leading scientists and engineers to discuss the possibility of creating machines that could think.

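The conference was a watershed moment for AI research. Its organizers set out an ambitious research agenda based on the conjecture that every aspect of learning and intelligence could in principle be described precisely enough for a machine to simulate it. The term “artificial intelligence” itself had been coined by McCarthy in the 1955 proposal for the conference.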

The Dartmouth Conference was followed by a period of rapid growth in AI research. In the 1960s, AI researchers made significant progress in problem solving, machine learning, and natural language processing, and work on the first expert systems began. In the 1970s, however, AI research hit a roadblock. Many early AI systems worked only on small, simplified problems and did not scale to real-world tasks, and funding for AI research dried up. This period of stagnation is known as the first “AI winter.”

In the 1980s, AI research began to recover. The recovery was driven first by commercial expert systems and later by renewed interest in neural networks, which could now be trained with the backpropagation algorithm. From the 1990s onward, statistical machine learning, growing computing power, and eventually the large datasets made available by the internet led to a new wave of progress in AI research.

Today, AI is a thriving field of research. AI systems are being used in a wide range of applications, including healthcare, finance, and transportation. AI is also being used to develop new technologies, such as self-driving cars and virtual assistants.

The Dartmouth Conference was a seminal event in the history of AI. It marked the beginning of a long and winding road that has led to the development of the AI systems that we use today. The conference also helped to shape the way we think about intelligence and machines. The participants at the conference believed that machines could be made to think, and they laid the foundation for the field of AI research.

Early AI pioneers and their contributions

There are many early AI pioneers who made significant contributions to the field. Here are a few of the most notable:

- Alan Turing: Turing is often called the father of AI. He is best known for the Turing machine, a theoretical model of computation that remains the standard definition of what can be computed. He also proposed the Turing test, a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

- John McCarthy: McCarthy is another of the founding fathers of AI. He coined the term “artificial intelligence” and created the Lisp programming language (1958), which dominated AI research for decades and whose dialects are still in use. He also founded the Stanford Artificial Intelligence Laboratory (SAIL), one of the leading AI research labs in the world.

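- Marvin Minsky: Minsky was a colleague of McCarthy and one of the leading AI researchers of the 20th century. In 1951 he built SNARC, one of the first neural network learning machines. With Seymour Papert he later wrote Perceptrons (1969), an influential analysis of the limits of simple neural networks, and he proposed frames as a way of representing knowledge. In 1959 he and McCarthy co-founded the AI group that became the MIT Artificial Intelligence Laboratory, another of the world’s leading AI research labs.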

- Claude Shannon: Shannon was a mathematician and engineer who founded information theory. Concepts from information theory, such as entropy, later became widely used in machine learning, and his 1950 paper on programming a computer to play chess was an early contribution to game-playing AI.

- Herbert Simon: Simon was a political scientist, economist, and cognitive psychologist who made significant contributions to artificial intelligence. Working with Allen Newell, he created the Logic Theorist and the General Problem Solver, early programs based on heuristic search. He won the Nobel Prize in Economics in 1978 for his research on decision-making in organizations.

These are just a few of the many early AI pioneers who made significant contributions to the field. Their work laid the foundation for the development of the AI systems that we use today.

The development of the perceptron and early neural networks

The perceptron is a type of artificial neuron that was first proposed by Frank Rosenblatt in 1957. The perceptron is a simple model of a biological neuron, and it is able to learn to classify input data into two categories.

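The perceptron is made up of four parts:

- Inputs: The numerical values (features) that the perceptron receives.

- Weights: Each input has a weight that determines how strongly it influences the decision. The weights are what the perceptron learns during training.

- Threshold: The value that the weighted sum of the inputs must reach in order for the perceptron to output a 1.

- Output: The perceptron’s decision, either 1 or 0.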

The perceptron works by calculating the weighted sum of its inputs and comparing it to the threshold. If the weighted sum is greater than or equal to the threshold, the perceptron outputs a 1. Otherwise, it outputs a 0.
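
The procedure above can be sketched in a few lines of Python. This is a minimal sketch that assumes Rosenblatt’s error-correction learning rule: whenever the perceptron misclassifies an example, each weight is nudged in the direction that would have reduced the error. It follows the common convention of folding the threshold into a learnable bias term (the bias is the negative of the threshold). The training data is the logical AND function, and the function names are hypothetical.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum of inputs reaches the threshold, else 0.

    With bias = -threshold, "sum >= threshold" becomes "sum + bias >= 0".
    """
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total >= 0 else 0


def train_perceptron(samples, labels, epochs=20, learning_rate=1.0):
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            # Nudge each weight toward the correct answer (no change if error == 0).
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


and_inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_labels = [0, 0, 0, 1]
w, b = train_perceptron(and_inputs, and_labels)
print([predict(w, b, x) for x in and_inputs])  # [0, 0, 0, 1]
```

AND is linearly separable, so the perceptron convergence theorem guarantees that this loop eventually stops making mistakes. Here it settles on weights [2.0, 1.0] and a bias of -3.0, that is, a threshold of 3.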

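Perceptrons can be connected together in layers to form neural networks. A single perceptron can only separate two categories with a straight line (or, with more inputs, a flat plane), so it cannot learn patterns that are not linearly separable. Multilayer networks can represent much more complex decision boundaries and can classify input data into more than two categories.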
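
A single perceptron draws one straight line through its input space, so it cannot separate the four cases of XOR (exclusive or), whose output is 1 exactly when its two inputs differ. Two layers of threshold units can. The Python sketch below wires up such a network by hand. The weights are chosen for illustration rather than learned, and the function names are hypothetical. One hidden unit computes OR, the other computes AND, and the output unit fires when OR is true but AND is not.

```python
def threshold_unit(weights, threshold, inputs):
    """Output 1 if the weighted sum of the inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def xor_network(x1, x2):
    # Hidden layer (weights chosen by hand for illustration, not learned).
    h_or = threshold_unit([1, 1], 1, [x1, x2])   # 1 if at least one input is 1
    h_and = threshold_unit([1, 1], 2, [x1, x2])  # 1 only if both inputs are 1
    # Output layer: "OR but not AND", which is exactly XOR.
    return threshold_unit([1, -1], 1, [h_or, h_and])


for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_network(x1, x2))
```

The hidden layer re-describes the inputs in terms of new features (OR and AND), and XOR is linearly separable in those features even though it is not separable in the raw inputs.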

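In 1969, Marvin Minsky and Seymour Papert published Perceptrons, a book that analyzed the limitations of single-layer perceptrons. The book showed, for example, that a single perceptron cannot compute the XOR (exclusive or) function, whose output is 1 exactly when its two inputs differ. It is often cited as a factor in the decline of neural network research in the 1970s.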

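Neural networks were not very successful at first. There was no widely used method for training networks with more than one layer, so they could not learn to solve many problems, and they were very computationally expensive for the computers of the time.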

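In the 1980s, there was a resurgence of interest in neural networks. This was driven largely by backpropagation, an algorithm for training multilayer networks that David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized in 1986, together with the availability of faster computers.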
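
Backpropagation, the training algorithm at the center of the 1980s revival, uses the chain rule to work out how much each weight contributed to the network’s error, then adjusts every weight by gradient descent. The NumPy sketch below trains a tiny two-layer network on XOR, the function a single perceptron cannot learn. It is a minimal sketch under stated assumptions: sigmoid activations, mean squared error, four hidden units, a learning rate of 1.0, and full-batch updates were all chosen for illustration rather than taken from any particular historical system.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# XOR training data: output is 1 exactly when the inputs differ.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(0)
W1 = rng.normal(size=(2, 4))   # input -> hidden weights
b1 = np.zeros((1, 4))
W2 = rng.normal(size=(4, 1))   # hidden -> output weights
b2 = np.zeros((1, 1))
learning_rate = 1.0
losses = []

for _ in range(5000):
    # Forward pass: compute the network's predictions.
    h = sigmoid(X @ W1 + b1)
    out = sigmoid(h @ W2 + b2)
    losses.append(float(np.mean((out - y) ** 2)))

    # Backward pass: chain rule, from the output layer back to the first layer.
    d_out = 2 * (out - y) / len(X) * out * (1 - out)  # gradient w.r.t. output pre-activation
    d_h = (d_out @ W2.T) * h * (1 - h)                # gradient w.r.t. hidden pre-activation

    # Gradient descent: move every weight against its gradient.
    W2 -= learning_rate * (h.T @ d_out)
    b2 -= learning_rate * d_out.sum(axis=0, keepdims=True)
    W1 -= learning_rate * (X.T @ d_h)
    b1 -= learning_rate * d_h.sum(axis=0, keepdims=True)

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print(np.round(out.ravel(), 2))
```

With these settings the loss typically falls close to zero and the outputs approach [0, 1, 1, 0], although results can depend on the random initialization and the learning rate.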

Neural networks have been very successful at solving a wide range of problems, including image recognition, speech recognition, and natural language processing.

Neural networks are still a very active area of research. There is a lot of work being done to improve the performance of neural networks and to make them more efficient.

Here are some of the benefits of using perceptrons and neural networks:

- They are able to learn from data: Perceptrons and neural networks can learn to classify input data without being explicitly programmed. This is done by training the network on a large dataset of examples.

- They are able to solve complex problems: Perceptrons and neural networks can be used to solve complex problems that would be difficult or impossible to solve using traditional programming techniques.

- They are becoming more efficient: Perceptrons and neural networks are becoming more efficient as new hardware and software are developed. This is making them more practical for use in real-world applications.

Here are some of the challenges of using perceptrons and neural networks:

- They can be difficult to train: Perceptrons and neural networks can be difficult to train. Training can be sensitive to the data, to how the weights are initialized, and to settings such as the learning rate, and it can get stuck in poor solutions.

- They can be computationally expensive: Perceptrons and neural networks can be computationally expensive to train and to use. This is especially true for large neural networks.

- They can be difficult to interpret: The decisions made by perceptrons and neural networks can be difficult to interpret. This is because what a network has learned is spread across many numerical weights rather than stated as explicit, human-readable rules.

Overall, perceptrons and neural networks are powerful tools that can be used to solve a wide range of problems. However, they also have some challenges that need to be addressed.

The role of symbolic AI and the logic-based approach

Symbolic AI is a subfield of artificial intelligence that uses symbolic representations of knowledge to reason about the world. Symbolic representations are used to represent objects, concepts, and relationships between them. Symbolic AI systems use logic-based reasoning to make inferences and draw conclusions from the knowledge that they have been given.

The logic-based approach to AI is based on the idea that intelligence can be explained in terms of logic and reasoning. Logic is a formal system for representing knowledge and reasoning about it. Reasoning is the process of using logic to draw conclusions from knowledge.
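
A minimal sketch of logic-based inference in Python makes this concrete. It assumes the simplest possible representation: facts are strings, and each rule states that if all of its premises are known, its conclusion is also known (propositional if-then rules with no variables). The procedure, called forward chaining, keeps applying rules until no new facts can be derived. The fact names and the function name `forward_chain` are hypothetical.

```python
def forward_chain(facts, rules):
    """Derive every fact that follows from `facts` using if-then `rules`.

    Each rule is (premises, conclusion): when every premise is known,
    the conclusion is added. Repeats until no new fact can be derived.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and premises <= known:  # all premises known
                known.add(conclusion)
                changed = True
    return known


rules = [
    ({"socrates_is_a_man"}, "socrates_is_mortal"),
    ({"socrates_is_mortal", "socrates_is_greek"}, "a_greek_is_mortal"),
]
facts = {"socrates_is_a_man", "socrates_is_greek"}
print(sorted(forward_chain(facts, rules)))
```

Starting from two facts, the system derives two new conclusions. Real symbolic systems use richer representations, such as first-order logic with variables, but the cycle of matching rules against known facts and adding conclusions is the same.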

The logic-based approach to AI has been successful in solving a number of problems, including:

- Propositional logic: Propositional logic is a simple form of logic that can be used to represent knowledge about the world. Propositional logic systems can be used to solve problems such as logic puzzles and game playing.

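- First-order logic: First-order logic is more expressive than propositional logic. It adds objects, relations, variables, and quantifiers such as “for all” and “there exists,” so a single sentence can state a general fact such as “every human is mortal.” First-order logic systems can be used to solve problems such as natural language processing and theorem proving.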

- Logic programming: Logic programming is a programming paradigm that is based on logic. Logic programming languages such as Prolog can be used to write programs that reason about the world.
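
In propositional logic, a knowledge base entails a sentence if the sentence is true in every assignment of truth values (every model) that makes the knowledge base true. For small problems this can be checked directly by enumerating all assignments, as in the Python sketch below. Representing sentences as Python functions of a model is an assumption made for brevity, and the function name `entails` is hypothetical.

```python
from itertools import product


def entails(symbols, kb, query):
    """Return True if `query` is true in every model in which `kb` is true."""
    for values in product([True, False], repeat=len(symbols)):
        model = dict(zip(symbols, values))  # one assignment of truth values
        if kb(model) and not query(model):
            return False  # counterexample: kb true but query false
    return True


symbols = ["rain", "wet"]


def kb(m):
    # Knowledge base: (rain -> wet) AND rain, with "p -> q" written as (not p) or q.
    return ((not m["rain"]) or m["wet"]) and m["rain"]


print(entails(symbols, kb, lambda m: m["wet"]))       # True: wet follows
print(entails(symbols, kb, lambda m: not m["rain"]))  # False
```

The loop examines 2^n models for n symbols, so this brute-force method is practical only for tiny problems; larger problems call for more sophisticated algorithms, such as SAT solvers.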

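The logic-based approach to AI has some limitations. One limitation is that it can be difficult to represent knowledge in a symbolic form. Another is that logic-based reasoning can be computationally expensive: even checking whether a set of propositional formulas can be satisfied is NP-complete, and reasoning in first-order logic is undecidable in general.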

Despite these limitations, the logic-based approach to AI has been successful in solving a number of problems. It is a promising approach to AI, and it is likely to continue to be used to solve new problems in the future.

Here are some of the benefits of using symbolic AI and the logic-based approach:

- They are able to represent knowledge explicitly: Symbolic AI systems can represent knowledge explicitly in the form of symbols. This makes it easier for humans to understand and work with the knowledge.

- They are able to reason logically: Symbolic AI systems can reason logically about the knowledge that they have been given. This allows them to solve problems that would be difficult or impossible to solve using other approaches.

- They can learn in limited ways: Classical symbolic systems do not learn on their own, but some symbolic methods can, for example by inducing general rules from examples (as in inductive logic programming). This allows such systems to improve their knowledge over time.

Here are some of the challenges of using symbolic AI and the logic-based approach:

- They can be difficult to build: Symbolic AI systems can be difficult to build because they require a deep understanding of the problem that they are trying to solve.

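- They can be difficult to scale: Symbolic AI systems can be difficult to scale to large problems because the number of possibilities they must consider can grow explosively with problem size, and encoding all of the required knowledge by hand quickly becomes impractical.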

- They can be brittle: Symbolic AI systems can be brittle, meaning that they can fail abruptly when an input falls even slightly outside what their hand-written rules anticipate.

Overall, symbolic AI and the logic-based approach are powerful tools that can be used to solve a wide range of problems. However, they also have some challenges that need to be addressed.

The limitations of early AI systems and the AI winter

The early AI systems had several limitations that led to the AI winter. These limitations included:

- They were not able to generalize: Early AI systems could only solve problems that they had been explicitly programmed to solve. They could not handle new problems, even ones similar to those they had been designed for.

- They were not able to learn from experience: Early AI systems could not learn from their mistakes. Improving their performance required programmers to add or revise rules by hand.

- They were not able to deal with uncertainty: Early AI systems could not deal with uncertainty. They required all of the information about a problem to be known in order to solve it.

These limitations led to a loss of confidence in the field of AI. Critics, and eventually funding agencies, concluded that AI’s early promises would not be met any time soon; in the UK, for example, the critical 1973 Lighthill report led to deep cuts in AI funding. The result was a decrease in funding for AI research and a period of stagnation in the field.

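The first AI winter is usually dated from the mid-1970s to around 1980. During this time, progress in AI research slowed considerably. The field recovered in the 1980s, first through commercial expert systems and then, from the mid-1980s, through renewed interest in neural networks. A second downturn followed in the late 1980s and early 1990s, when the market for expert systems collapsed.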

Neural networks are a type of machine learning model loosely inspired by the neurons of the brain. They learn to solve problems from examples rather than being explicitly programmed, which lets them handle tasks, such as recognizing images or speech, that are hard to capture in hand-written rules.

The development of neural networks led to a resurgence of interest in AI research. The field is now thriving, and there is a lot of excitement about the potential of AI to solve real-world problems.

Despite the progress that has been made in AI research, there are still some limitations to current AI systems. These limitations include:

- They can be biased: AI systems can be biased if they are trained on data that is biased. This can lead to the systems making unfair or discriminatory decisions.

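- They can be attacked: AI systems can be manipulated with carefully crafted inputs (adversarial examples) or corrupted training data, and like other software they can be hacked, which could allow attackers to control the systems or steal their data.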

- They can be used for malicious purposes: AI systems can be used for malicious purposes, such as spreading misinformation or propaganda.

These limitations are a challenge to the field of AI. Researchers are working to address these limitations and to develop AI systems that are safe, reliable, and fair.

The resurgence of AI in the 1980s and the rise of expert systems

In the 1980s, there was a resurgence of interest in artificial intelligence (AI). This was due to a number of factors, including the development of new techniques, such as expert systems, and the availability of more powerful computers.

Expert systems are a type of AI system that is designed to mimic the reasoning of a human expert in a particular domain. Expert systems are typically rule-based systems, meaning that they contain a set of rules that the system can use to reason about a problem.

Early expert systems such as DENDRAL (begun in 1965) and MYCIN (developed in the early 1970s) showed what the approach could do, but the field did not really take off until the 1980s. This was due to a number of factors, including the development of more powerful computers, new techniques for representing knowledge, and the availability of commercial expert system shells.

Commercial expert system shells are software packages that provide a framework for developing expert systems. A shell typically supplies a generic inference engine, a structure for the knowledge base (which the developer fills with domain-specific rules), and a user interface.
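
The separation between a generic inference engine and a domain-specific knowledge base can be sketched in Python. This is a minimal sketch, not the design of any particular shell. The engine below uses backward chaining, which starts from a goal and works back toward known facts and is the style of reasoning MYCIN used. The car-diagnosis rules and the function name `backward_chain` are hypothetical.

```python
def backward_chain(goal, rules, facts, in_progress=frozenset()):
    """Inference engine: try to prove `goal` by working backward from it.

    `rules` maps a conclusion to a list of alternative premise lists;
    `facts` is the set of things already known to be true.
    """
    if goal in facts:
        return True
    if goal in in_progress:  # guard against circular rules
        return False
    for premises in rules.get(goal, []):
        # Try each alternative: the goal holds if every premise can be proved.
        if all(backward_chain(p, rules, facts, in_progress | {goal}) for p in premises):
            return True
    return False


# Knowledge base (hypothetical car-diagnosis rules): the domain-specific part.
knowledge_base = {
    "battery_dead": [["lights_dim", "engine_silent"]],
    "replace_battery": [["battery_dead", "battery_old"]],
}
observations = {"lights_dim", "engine_silent", "battery_old"}

print(backward_chain("replace_battery", knowledge_base, observations))  # True
```

Swapping in a different `knowledge_base` changes the system’s domain without touching `backward_chain`, which is the idea behind a shell.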

The development of expert systems led to a resurgence of interest in AI in the 1980s. Expert systems were used in a wide variety of domains, including medicine, finance, and manufacturing.

However, the popularity of expert systems declined from the late 1980s onward due to a number of factors, including the high cost of building and maintaining large rule bases, the difficulty of capturing real-world complexity in hand-written rules, and the rise of machine learning.

Despite the decline in popularity of expert systems, they continue to be used in some domains, such as medicine and finance.

Here are some of the benefits of using expert systems:

- They can provide expert advice: Expert systems can provide expert advice to users in a variety of domains. This can help users to make better decisions and to solve problems more effectively.

- They can be used to automate tasks: Expert systems can be used to automate tasks that would otherwise be performed by humans. This can save time and money.

- They can preserve and share expertise: Expert systems can capture the knowledge of scarce human experts and make it available to non-experts, and many can explain which rules led to a recommendation. This can help users understand and trust the advice they receive.

Here are some of the challenges of using expert systems:

- They can be expensive to develop: Expert systems can be expensive to develop, especially if they are used in a complex domain.

- They can be difficult to maintain: Expert systems can be difficult to maintain, especially if the domain that they are used in is constantly changing.

- They can be brittle: Expert systems can be brittle, meaning that they can fail abruptly when a situation falls outside the cases their rules anticipate.

Overall, expert systems are a powerful tool that can be used to solve a wide range of problems. However, they also have some challenges that need to be addressed.

The impact of machine learning and the birth of modern AI

Machine learning (ML) is a subset of artificial intelligence (AI) that gives computers the ability to learn without being explicitly programmed. ML algorithms are trained on data, and the data is used to teach the algorithms how to perform a task. ML has had a major impact on the field of AI, and it is one of the driving forces behind the birth of modern AI.

Here are some of the ways in which ML has impacted AI:

- ML has made AI more powerful: ML algorithms can learn to perform tasks, such as recognizing faces, that would be difficult or impossible to program by hand. This has made AI more powerful and has allowed it to be used in a wider range of applications.

- ML has made AI more scalable: ML algorithms can be trained on large datasets, and this has made AI more scalable. This has allowed AI to be used to solve problems that would be too large or complex for traditional AI techniques.

- ML has made AI more affordable: Open-source software, commodity hardware such as graphics processors (GPUs), and cloud computing have lowered the cost of building ML systems. This has allowed AI to be used by a wider range of people and organizations.

Modern AI is largely a product of ML. By making AI more powerful, scalable, and affordable, ML has allowed AI to be applied to a wide range of problems.

Here are some of the examples of how ML is being used to solve real-world problems:

- Healthcare: ML is being used to develop new drugs and treatments, to diagnose diseases, and to personalize healthcare.

- Finance: ML is being used to predict market trends, to manage risk, and to provide financial advice.

- Manufacturing: ML is being used to improve quality control, to optimize production processes, and to design new products.

- Transportation: ML is being used to develop self-driving cars, to improve traffic management, and to optimize logistics.

- Retail: ML is being used to personalize shopping experiences, to recommend products, and to prevent fraud.

These are just a few of the ways in which ML is being used to solve real-world problems. ML is a powerful tool that has the potential to revolutionize many industries.

Here are some of the challenges that need to be addressed in order to further the development of ML:

- Data collection: ML algorithms require large datasets to train, and it can be difficult and expensive to collect this data.

- Data quality: The quality of the data used to train ML algorithms is critical, and it can be difficult to ensure that the data is accurate and complete.

- Bias: ML algorithms can be biased if they are trained on data that is biased. This can lead to the algorithms making unfair or discriminatory decisions.

- Explainability: It can be difficult to explain how ML algorithms make decisions, and this can make it difficult to trust these algorithms.

Despite these challenges, ML is a powerful tool that has the potential to revolutionize many industries. ML remains a very active field, and a lot of research is being done to address these challenges.

Key breakthroughs in natural language processing and computer vision

Here are some of the key breakthroughs in natural language processing (NLP) and computer vision (CV):

- Transformers: Transformers are a type of neural network, introduced in 2017, that have been shown to be very effective for NLP tasks, such as machine translation, text summarization, and question answering.

- Generative pre-trained transformers: Generative pre-trained transformers are a type of transformer that has been pre-trained on a large corpus of text. This pre-training allows the transformer to learn the statistical relationships between words and phrases, which makes it better at generating text, translating languages, and answering questions.

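- Self-attention: Self-attention is the mechanism at the heart of transformers. It lets each word in a sentence draw information directly from every other word, weighted by how relevant they are to each other. This makes it easier to capture long-range dependencies than in earlier recurrent neural networks, which processed words one at a time, and it has made transformers substantially better than previous neural network models on many language tasks.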

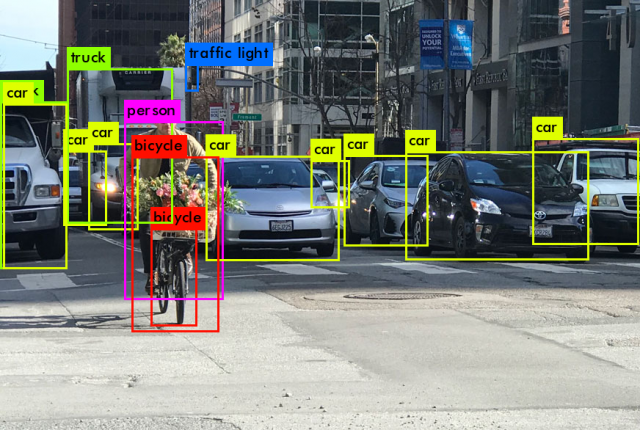

- Convolutional neural networks: Convolutional neural networks (CNNs) are a type of neural network that have been shown to be very effective for CV tasks, such as image classification, object detection, and face recognition.

- Residual networks: Residual networks (ResNets) are a type of CNN that adds shortcut (skip) connections, so that each block only has to learn a correction (a residual) to its input. This makes very deep networks, with a hundred or more layers, much easier to train, which improved accuracy on tasks such as image classification and object detection.

- Data augmentation: Data augmentation is a technique that is used to artificially increase the size of a training dataset. This is done by creating new data points from existing data points. Data augmentation can help to improve the performance of machine learning models by making them more robust to variations in the data.

These are just a few of the key breakthroughs that have been made in NLP and CV in recent years. These breakthroughs have led to significant improvements in the performance of NLP and CV models, which has made these technologies more widely available and useful.
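
Self-attention, one of the breakthroughs listed above, can be written in a few lines of NumPy. This sketch follows the single-head scaled dot-product formulation from the 2017 transformer paper, softmax(QKᵀ/√d_k)V. The random matrices stand in for learned weights and token embeddings, the sizes are arbitrary, and the function names are hypothetical. Real transformers add multiple heads, positional information, and many stacked layers.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention.

    X has one row per token. Returns one output row per token, plus the
    attention weights (row i: how much token i attends to each token).
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv  # queries, keys, values
    d_k = K.shape[-1]
    weights = softmax(Q @ K.T / np.sqrt(d_k), axis=-1)
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 8, 4
X = rng.normal(size=(seq_len, d_model))  # stand-in token embeddings
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))  # stand-in learned weights
out, attn = self_attention(X, Wq, Wk, Wv)
print(out.shape, attn.shape)  # (5, 4) (5, 5)
```

Row i of `attn` sums to 1 and shows how strongly token i attends to every token in the sequence, including distant ones. This is how self-attention captures long-range dependencies in a single step.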

Here are some of the applications of NLP and CV:

- Machine translation: NLP is used to translate text from one language to another. This is a very challenging task, but NLP models have become very good at it in recent years.

- Text summarization: NLP is used to summarize long pieces of text. This is useful for tasks such as condensing reports, research papers, or news stories into short digests.

- Question answering: NLP is used to answer questions about text. This is useful for tasks such as providing customer support or helping students with their homework.

- Image classification: CV is used to classify images into different categories. This is useful for tasks such as tagging photos or sorting medical images.

- Object detection: CV is used to locate and identify objects within images. This is useful for tasks such as detecting pedestrians and other vehicles in self-driving cars.

- Face recognition: CV is used to recognize faces. This is useful for tasks such as security or unlocking phones.

These are just a few of the many applications of NLP and CV. These technologies are becoming increasingly important in our lives, and they are likely to play an even larger role in the future.
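
Computer vision tasks such as image classification and object detection are usually built on convolutional neural networks, whose core operation is simple: slide a small filter (kernel) across an image and compute a weighted sum at each position. The NumPy sketch below shows that operation on a toy image. The filter is hand-chosen to respond to vertical edges, whereas a CNN learns its filter values from data. The function name is hypothetical, and, as in most deep learning libraries, the kernel is not flipped, so strictly speaking this is cross-correlation.

```python
import numpy as np


def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNNs (no padding, stride 1).

    Like most deep learning libraries, this does not flip the kernel,
    so it is technically cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Toy 4x4 image: dark (0) on the left, bright (1) on the right.
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)
# Hand-chosen vertical-edge filter; a CNN would learn its filter values.
kernel = np.array([[-1, 1], [-1, 1]], dtype=float)
print(conv2d(image, kernel))
```

The output is large only in the middle column, where the image changes from dark to bright. A CNN stacks many such learned filters, so that early layers detect edges and later layers combine them into shapes and objects.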

The emergence of intelligent agents and autonomous systems

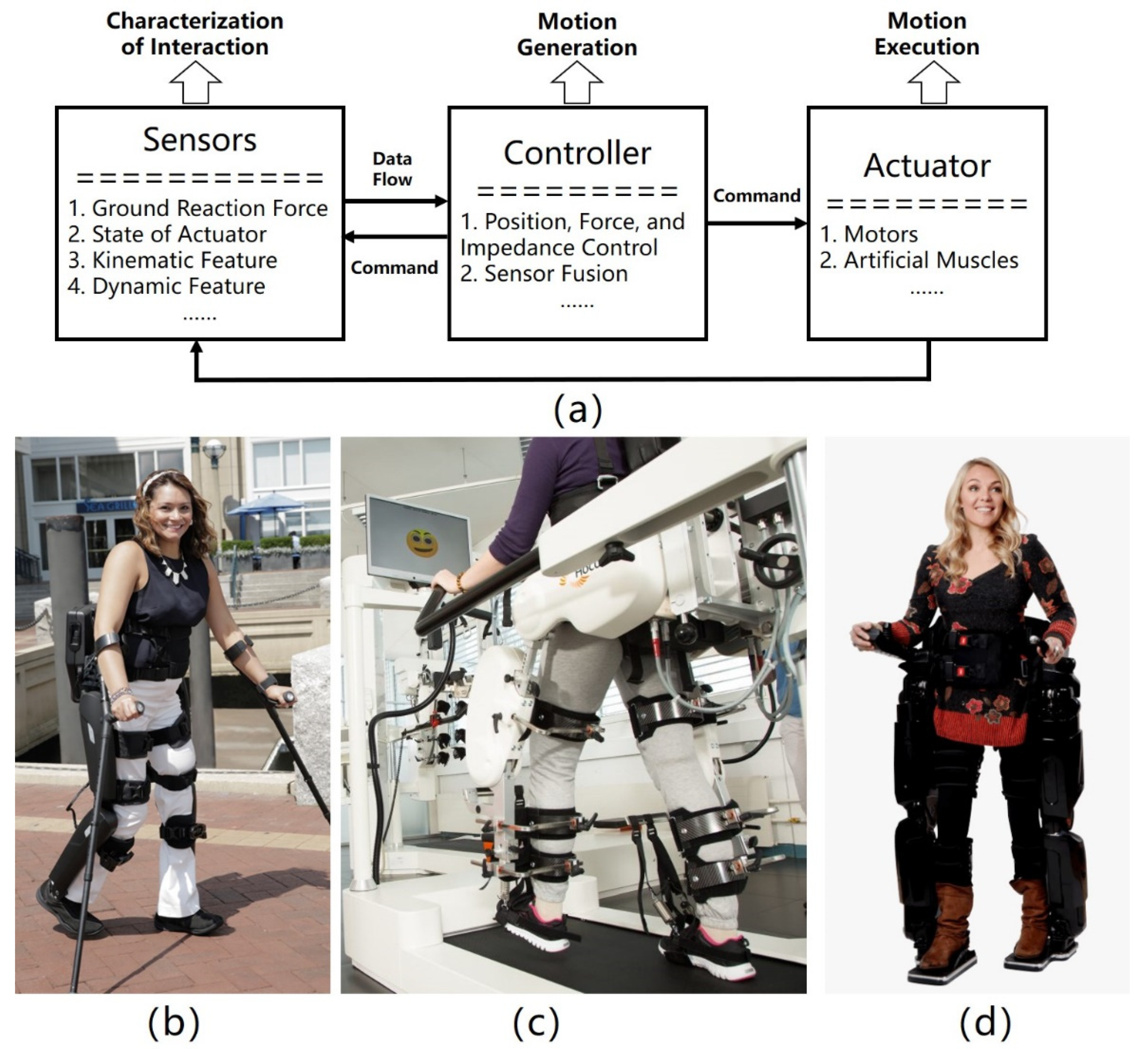

Intelligent agents and autonomous systems form a rapidly growing field of research that has the potential to revolutionize many industries. Intelligent agents are software systems that perceive their environment and can reason, learn, and act autonomously to achieve goals. Autonomous systems are systems, often physical ones such as robots and vehicles, that can operate without human intervention.
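
An intelligent agent can be described as a loop: perceive the environment, decide on an action, act, and repeat. The Python sketch below shows the simplest version of that loop, a simple reflex agent that maps each percept directly to an action through condition-action rules. The thermostat scenario, its room dynamics, and the function names are hypothetical. A real autonomous system would add a model of its environment, planning, and learning on top of this loop.

```python
def thermostat_agent(temperature, target=21.0, tolerance=0.5):
    """A simple reflex agent: maps the current percept straight to an action."""
    if temperature < target - tolerance:
        return "heat"
    if temperature > target + tolerance:
        return "cool"
    return "idle"


def run_agent(agent, temperature, steps=10):
    """Run the perceive-decide-act loop in a toy simulated room.

    The room dynamics (each action changes the temperature by 1 degree)
    are a hypothetical stand-in for a real environment.
    """
    effects = {"heat": 1.0, "cool": -1.0, "idle": 0.0}
    history = []
    for _ in range(steps):
        action = agent(temperature)     # perceive the state and decide
        temperature += effects[action]  # act, which changes the environment
        history.append((action, temperature))
    return history


for action, temp in run_agent(thermostat_agent, temperature=17.0, steps=6):
    print(f"{action:>5} -> {temp:.1f}")
```

Starting at 17 degrees, the agent heats the room for four steps until it reaches the 21-degree target, then idles.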

There are many different types of intelligent agents and autonomous systems, and they are being used in a wide variety of applications. Some examples include:

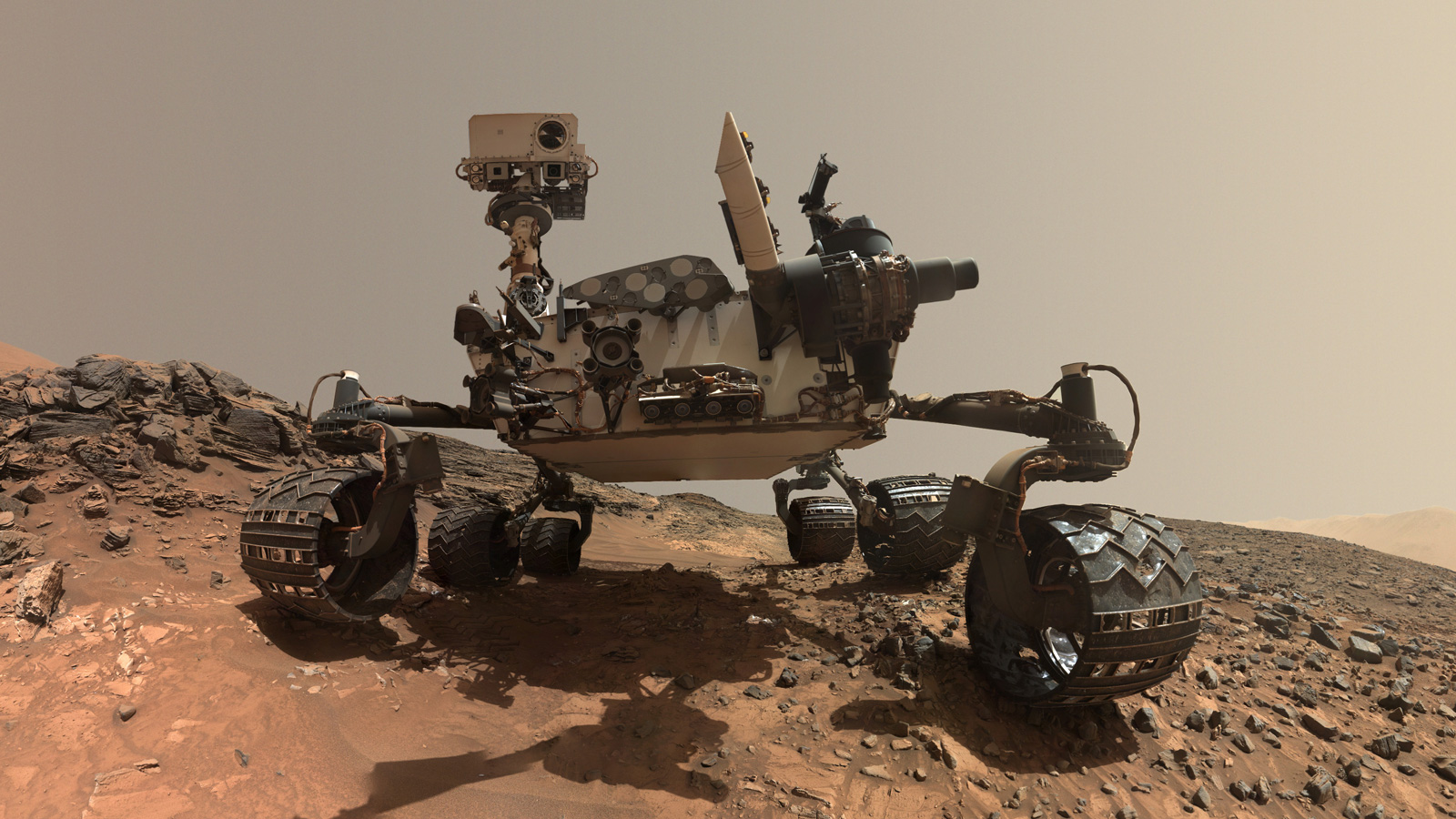

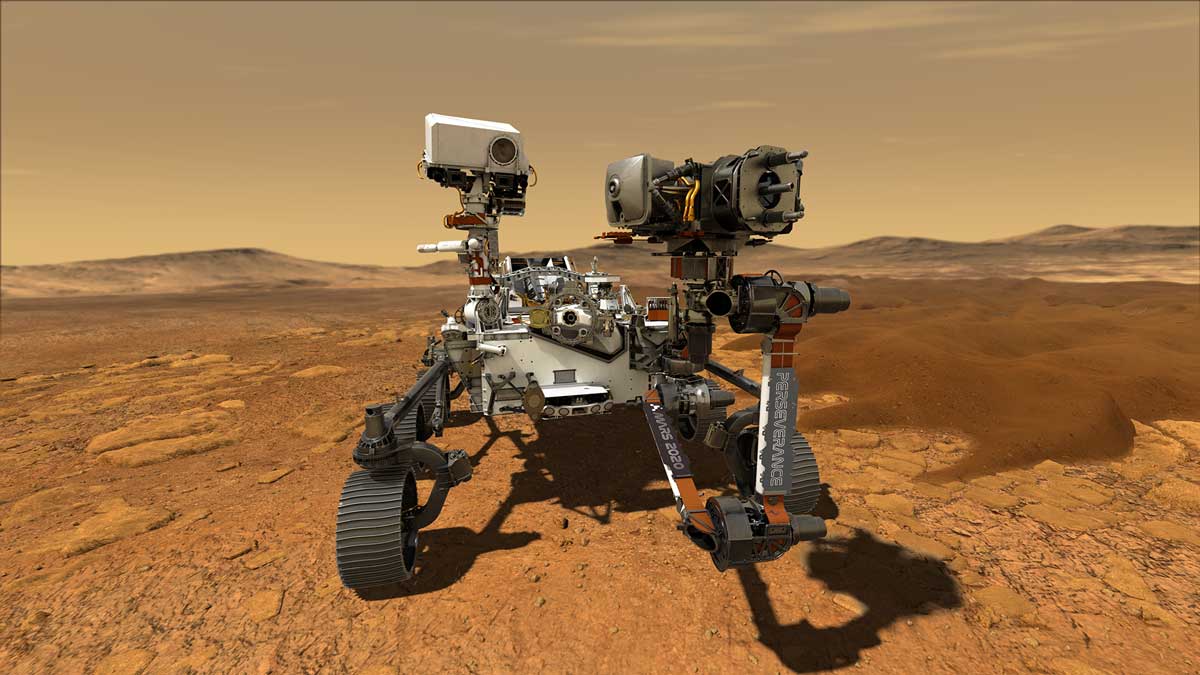

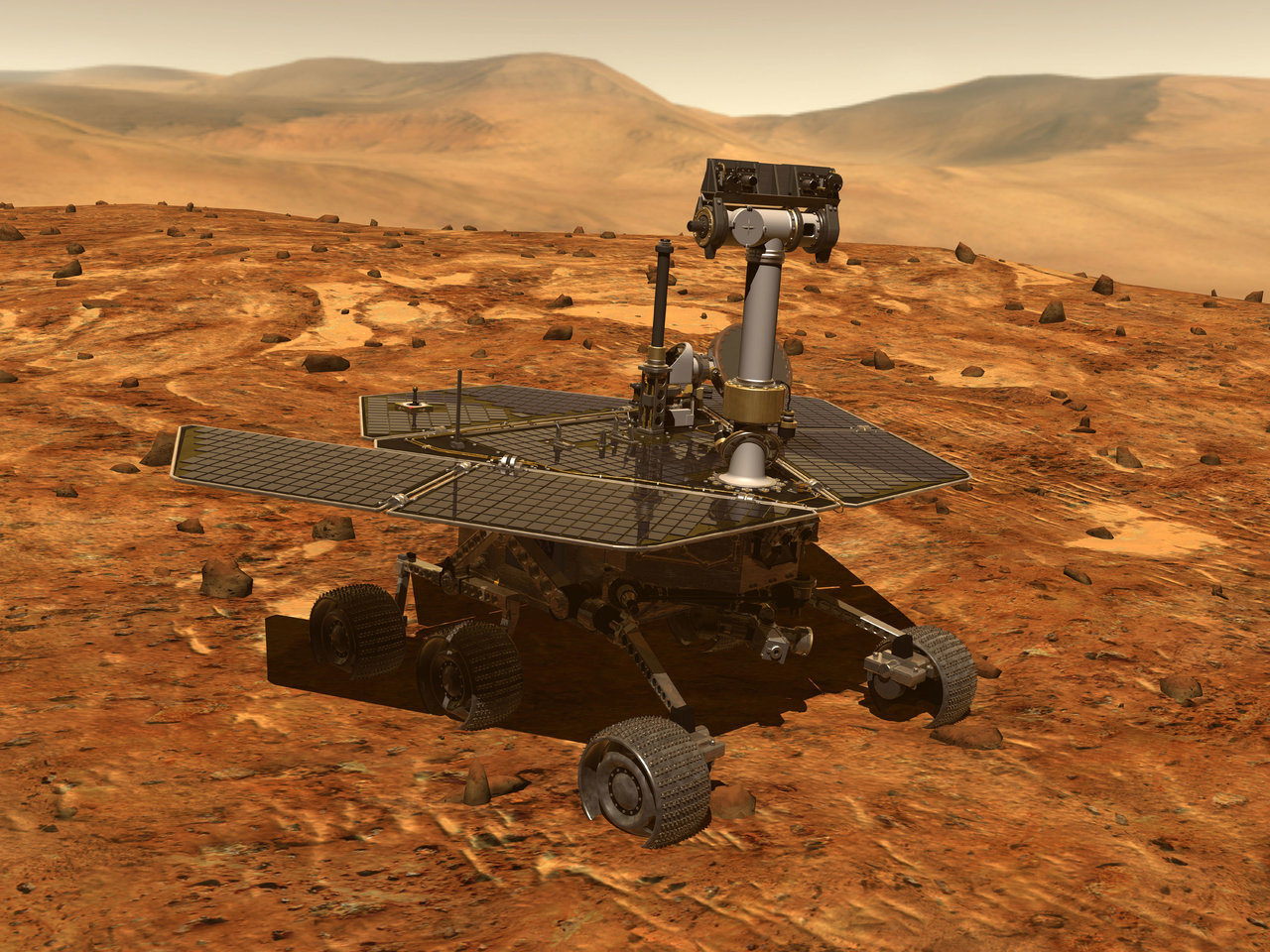

- Self-driving cars: Self-driving cars use a variety of sensors and artificial intelligence (AI) algorithms to navigate the road with little or no human input.

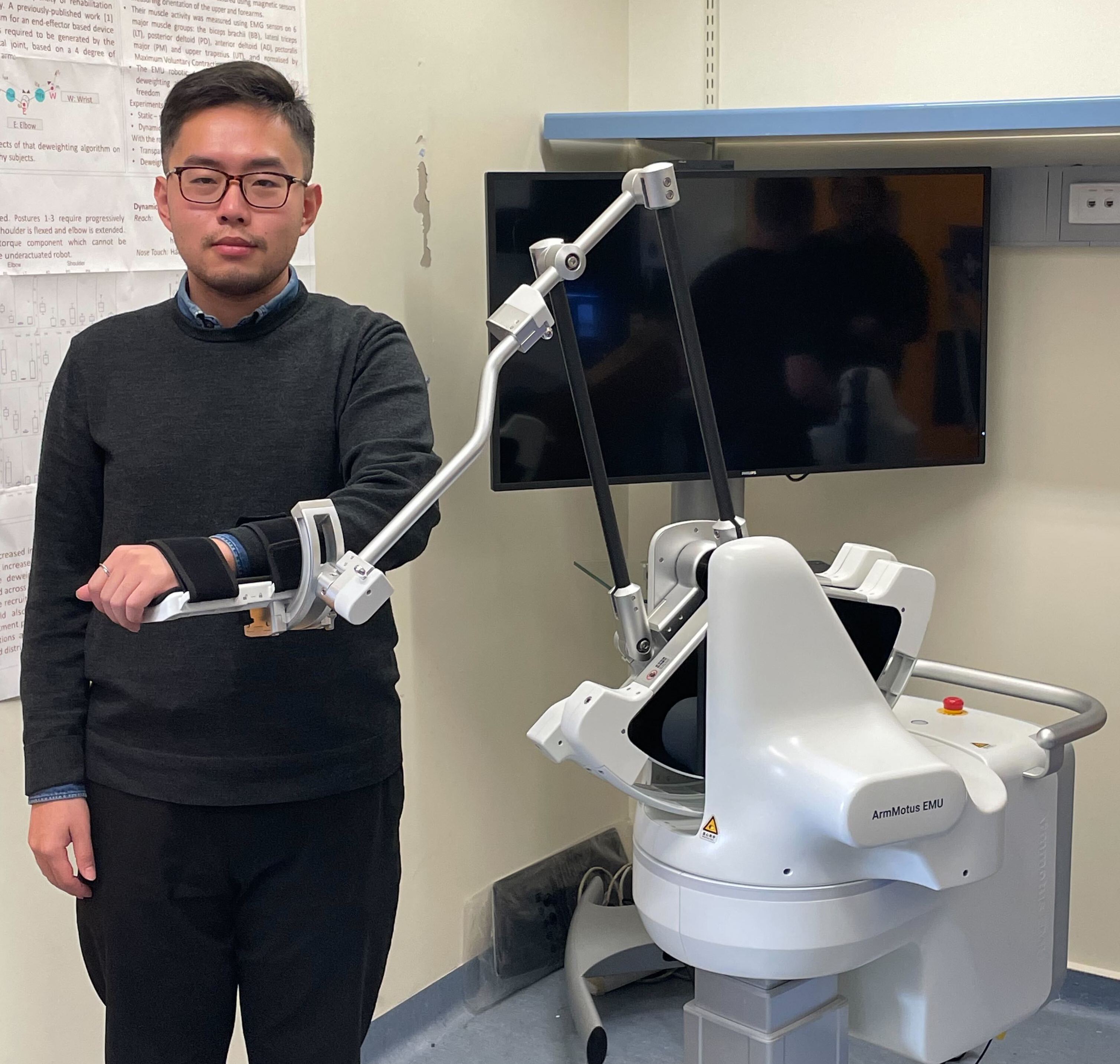

- Medical robots: Medical robots assist in surgery, deliver medication, and provide care to patients. Most surgical robots in use today are controlled by a surgeon rather than operating autonomously.

- Manufacturing robots: Manufacturing robots are used to automate tasks such as welding, painting, and assembling products.

- Financial trading bots: Financial trading bots are used to automatically buy and sell stocks and other financial instruments.

- Customer service chatbots: Customer service chatbots are used to answer customer questions and resolve issues without the need for human intervention.

The development of intelligent agents and autonomous systems is still in its early stages, but the field is growing rapidly. There are many challenges that need to be addressed, such as the development of more powerful AI algorithms, the creation of safer and more reliable systems, and the development of ethical guidelines for the use of these technologies.

Despite these challenges, the potential benefits of intelligent agents and autonomous systems are enormous. These technologies have the potential to improve our lives in many ways, such as by making our transportation, healthcare, and manufacturing systems more efficient and safer. They also have the potential to create new jobs and industries.

The emergence of intelligent agents and autonomous systems is a major technological development that is likely to have a profound impact on our lives. It is important to be aware of this development and to consider the potential benefits and challenges that these technologies pose.

Here are some of the potential benefits of intelligent agents and autonomous systems:

- Increased efficiency: Intelligent agents and autonomous systems can automate tasks that are currently performed by humans, which can lead to increased efficiency.

- Improved safety: Intelligent agents and autonomous systems can be programmed to follow safety rules and regulations, which can lead to improved safety.

- Reduced costs: Intelligent agents and autonomous systems can reduce costs by automating tasks and by improving efficiency.

- New opportunities: Intelligent agents and autonomous systems can create new opportunities in a variety of industries, such as transportation, healthcare, and manufacturing.

Here are some of the potential challenges of intelligent agents and autonomous systems:

- Ethical concerns: There are ethical concerns about the use of intelligent agents and autonomous systems, such as the potential for these systems to be used for malicious purposes or to discriminate against certain groups of people.

- Security concerns: There are security concerns about the potential for intelligent agents and autonomous systems to be hacked or attacked.

- Job displacement: There is a potential for intelligent agents and autonomous systems to displace jobs that are currently performed by humans.

It is important to be aware of both the potential benefits and challenges of intelligent agents and autonomous systems. These technologies have the potential to improve our lives in many ways, but they also pose some challenges that need to be addressed.

The influence of AI in various industries, including healthcare and finance

Artificial Intelligence (AI) is rapidly changing the world, and its influence is being felt in a wide variety of industries. In healthcare and finance, for example, AI is being used to improve patient care, reduce costs, and make better financial decisions.

Here are some examples of how AI is being used in healthcare:

- Diagnosis: AI-powered tools can help doctors diagnose diseases more accurately and quickly. For example, IBM Watson, a cognitive computing platform, was applied to cancer diagnosis and treatment recommendations, although its results in clinical practice proved mixed.

- Treatment: AI can be used to personalize treatment plans for patients. For example, DeepMind (now part of Google) has developed models that predict which patients are at risk of deteriorating, such as those likely to develop acute kidney injury, so that clinicians can intervene earlier.

- Research: AI is being used to accelerate research into new drugs and treatments. For example, the startup Insilico Medicine is using AI to design new molecules that could be used to treat cancer.

Here are some examples of how AI is being used in finance:

- Fraud detection: AI can be used to detect fraudulent transactions. For example, the company SAS is using AI to help banks detect credit card fraud.

- Risk management: AI can be used to manage risk in financial markets. For example, the company BlackRock is using AI to help manage its investment portfolios.

- Investment advice: AI and automated algorithms can be used to provide investment advice to customers. For example, Wealthfront, a robo-advisor, uses software to build and rebalance customers' investment portfolios automatically.
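The sketch below is a much-simplified illustration of fraud detection. It flags a transaction when its amount is far from a customer's usual spending, measured in standard deviations (a z-score). This is a basic statistical rule, not a trained model. The function name, threshold, and amounts are hypothetical. Production fraud systems combine many more signals, such as merchant, location, and time of day, and usually learn their rules from labeled examples.

```python
from statistics import mean, stdev


def flag_unusual(history, new_amounts, threshold=3.0):
    """Return the new amounts that lie more than `threshold` standard
    deviations away from the mean of the customer's past amounts."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 values
    return [amt for amt in new_amounts if abs(amt - mu) > threshold * sigma]


# Hypothetical purchase history for one customer (in dollars).
history = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.8]
print(flag_unusual(history, [43.0, 980.0, 52.0]))  # [980.0]
```

A fixed z-score rule assumes a customer's spending is fairly stable. For someone whose spending genuinely varies a lot, it would either raise many false alarms or miss real fraud, which is one reason real systems learn from data instead.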

Adoption of AI in these industries is still uneven, but the technology has the potential to transform many of them. Healthcare providers and financial institutions already use it to improve patient care, reduce costs, and make better financial decisions, and its influence is likely to grow as the technology matures.

Here are some of the potential benefits of AI in healthcare and finance:

- Improved patient care: AI can be used to diagnose diseases more accurately and quickly, personalize treatment plans, and accelerate research into new drugs and treatments.

- Reduced costs: Automating work such as fraud screening, risk monitoring, routine investment advice, and parts of the diagnostic process can lower costs for financial institutions and healthcare providers.

- Better financial decisions: AI can be used to analyze large amounts of data and identify patterns that humans might not be able to see. This can help investors make better decisions about where to allocate their money.

Here are some of the potential challenges of AI in healthcare and finance:

- Ethical concerns: There are ethical concerns about the use of AI in healthcare and finance, such as the potential for AI to be used for malicious purposes or to discriminate against certain groups of people.

- Security concerns: There are security concerns about the potential for AI systems to be hacked or attacked.

- Job displacement: There is a potential for AI systems to displace jobs that are currently performed by humans.

It is important to be aware of both the potential benefits and challenges of AI in healthcare and finance. These technologies have the potential to improve our lives in many ways, but they also pose some challenges that need to be addressed.

Ethical considerations and challenges in AI development

As artificial intelligence (AI) continues to develop, it is important to examine the ethical questions and practical challenges its use raises. Some of the key ethical considerations include:

- Transparency: AI systems should be transparent in their decision-making process so that users can understand how they work and why they make certain decisions.

- Fairness: AI systems should be fair in their treatment of all users, regardless of their race, gender, ethnicity, or other personal characteristics.

- Accountability: There should be clear lines of responsibility for AI systems, so that the people and organizations that develop and deploy them can be held accountable for any harm the systems cause.

- Privacy: AI systems should respect the privacy of users and only collect and use data that is necessary for their operation.

- Safety: AI systems should be safe and should not pose a risk to users or to society as a whole.

Some of the key challenges in AI development include:

- Bias: AI systems can be biased if they are trained on data that is biased. This can lead to AI systems making unfair or discriminatory decisions.

- Explainability: It can be difficult to explain how AI systems make decisions. This can make it difficult to trust these systems and to hold those responsible for their development accountable.

- Security: AI systems can be hacked or attacked. This could lead to the theft of data or to the disruption of services.

- Job displacement: AI systems have the potential to displace jobs that are currently performed by humans. This could lead to increased unemployment and economic hardship.
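One simple way to check a system for bias is to compare how often it makes favorable decisions for each group. This measure is known as demographic parity. The sketch below assumes binary decisions (1 = approve, 0 = deny) and a single group label per person. The data and function names are hypothetical. A large gap is a signal to investigate, not proof of discrimination. Other fairness measures, such as comparing error rates across groups, can point in different directions.

```python
def approval_rates(decisions, groups):
    """Fraction of favorable (1) decisions received by each group."""
    totals, approvals = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        approvals[group] = approvals.get(group, 0) + decision
    return {g: approvals[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in approval rate between any two groups."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions from a model, with each applicant's group.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(approval_rates(decisions, groups))          # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(decisions, groups))  # 0.5
```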

It is important to address these ethical considerations and challenges in order to ensure that AI is used in a safe, responsible, and ethical way.

Here are some of the things that can be done to address these ethical considerations and challenges:

- Develop ethical guidelines for the development and use of AI. These guidelines should be developed by a variety of stakeholders, including researchers, developers, businesses, and policymakers.

- Educate the public about AI and its potential benefits and risks. This will help people to understand how AI works and to make informed decisions about its use.

- Develop AI systems that are transparent, fair, accountable, and safe. This will require the development of new technologies and approaches to AI development.

- Invest in research on the ethical implications of AI. This research will help us to better understand the potential risks and benefits of AI and to develop policies and practices to mitigate the risks and maximize the benefits.

By addressing these ethical considerations and challenges, we can help to ensure that AI is used in a way that benefits society and does not harm it.

The future of AI: advancements in deep learning and reinforcement learning

Artificial intelligence (AI) is a rapidly evolving field with the potential to revolutionize many aspects of our lives. In recent years, there has been significant progress in the development of two key AI technologies: deep learning and reinforcement learning.

Deep learning is a type of machine learning that uses artificial neural networks with many layers to learn from data. Neural networks are loosely inspired by the neurons of the human brain, and they can learn complex patterns from large amounts of data. Deep learning has been used to achieve state-of-the-art results in a wide variety of tasks, including image classification, natural language processing, and speech recognition.
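The basic building block of a neural network is an artificial neuron. It computes a weighted sum of its inputs and adjusts its weights based on examples. The sketch below trains a single neuron, the classic perceptron, to reproduce the logical AND function. It is illustrative only. Deep learning stacks many layers of such units and trains them with gradient descent and backpropagation, not with this simple update rule. The function names and data are hypothetical.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum of the inputs plus the bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=100, lr=1.0):
    """Perceptron learning rule: after each mistake, shift the weights
    toward the correct answer. Stops once an epoch has no mistakes."""
    weights, bias = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:
            break
    return weights, bias


and_samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_samples)
print([predict(w, b, x) for x, _ in and_samples])  # [0, 0, 0, 1]
```

A single perceptron can only learn patterns that a straight line (or flat plane) can separate. Learning richer patterns, such as those in images or speech, is what the many layers of a deep network provide.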

Reinforcement learning is a type of machine learning in which an agent learns how to behave in an environment by trial and error. After each action, the agent receives a numerical reward. Actions that lead to desired outcomes earn high rewards, while actions that lead to undesired outcomes earn low or negative rewards (penalties). Over many trials, the agent learns a policy that maximizes its total reward over time. Reinforcement learning has been used to train agents to play games, control robots, and make financial decisions.
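Tabular Q-learning is one classic reinforcement-learning algorithm. The sketch below applies it to a hypothetical five-cell corridor. The agent starts at the left end and earns a reward of 1 only when it reaches the right end. It keeps a table of estimated future rewards for each (state, action) pair. After each step, it nudges the relevant estimate toward the reward it just received plus the discounted value of the best next action. The environment and the settings (learning rate `alpha`, discount `gamma`, exploration rate `epsilon`) are illustrative choices, not values from any particular system.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, move):
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + move, 0), N_STATES - 1)  # walls at both ends
    if next_state == N_STATES - 1:
        return next_state, 1.0, True  # reward only for reaching the goal
    return next_state, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy choice: act at random with probability epsilon
            # (or when both estimates are tied), otherwise pick the best.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(N_STATES - 1)]
print(policy)  # ['right', 'right', 'right', 'right']
```

The random exploration step matters. Without it, the agent could lock in whichever action first happened to look good and never discover a better one.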

The advancements in deep learning and reinforcement learning are driving the future of AI. These technologies are being used to develop new AI applications in a wide variety of domains, including healthcare, transportation, and manufacturing.

Here are some examples of how deep learning and reinforcement learning are being used to develop new AI applications:

- Healthcare: Deep learning is being used to develop AI-powered systems for diagnosing diseases, developing new drugs, and providing personalized healthcare.

- Transportation: Deep learning and reinforcement learning are being used to develop self-driving cars, drones, and other autonomous vehicles.

- Manufacturing: Deep learning and reinforcement learning are being used to develop AI-powered systems for quality control, product design, and logistics.

The advancements in deep learning and reinforcement learning are just the beginning of the future of AI. As these technologies continue to develop, we can expect to see even more powerful and sophisticated AI applications being developed in the years to come.

Here are some of the potential benefits of the advancements in deep learning and reinforcement learning:

- Improved healthcare: AI-powered systems could help to diagnose diseases more accurately, develop new drugs more quickly, and provide personalized healthcare.

- Safer transportation: Self-driving cars and other autonomous vehicles could help to reduce traffic accidents and fatalities.

- More efficient manufacturing: AI-powered systems could help to improve quality control, product design, and logistics.

The advancements in deep learning and reinforcement learning also pose some challenges, such as:

- Bias: AI systems can be biased if they are trained on data that is biased. This could lead to AI systems making unfair or discriminatory decisions.

- Explainability: It can be difficult to explain how AI systems make decisions. This could make it difficult to trust these systems and to hold those responsible for their development accountable.

- Security: AI systems can be hacked or attacked. This could lead to the theft of data or to the disruption of services.

It is important to address these challenges in order to ensure that the advancements in deep learning and reinforcement learning are used in a safe, responsible, and ethical way.

The potential impact of AI on society and the job market

Artificial intelligence (AI) is rapidly changing the world, and its impact is being felt in a wide variety of industries and societies.

Potential benefits of AI to society

- Improved healthcare: AI can be used to diagnose diseases more accurately, develop new drugs more quickly, and provide personalized healthcare.

- Safer transportation: Self-driving cars and other autonomous vehicles could help to reduce traffic accidents and fatalities.

- More efficient manufacturing: AI-powered systems could help to improve quality control, product design, and logistics.

- More sustainable energy use: AI can be used to forecast energy demand, manage power grids more efficiently, and reduce energy consumption, for example in buildings and data centers.

- Improved education: AI can be used to personalize learning, provide feedback, and create more engaging educational experiences.

- Reduced poverty: AI can be used to improve agricultural productivity, create new jobs, and provide financial services to people in developing countries.

Potential challenges of AI to society

- Job displacement: AI has the potential to displace jobs that are currently performed by humans. This could lead to increased unemployment and economic hardship.

- Bias: AI systems can be biased if they are trained on data that is biased. This could lead to AI systems making unfair or discriminatory decisions.

- Security: AI systems can be hacked or attacked. This could lead to the theft of data or to the disruption of services.

- Privacy: AI systems can collect and use large amounts of data about people. This could raise concerns about privacy and data protection.

- Weaponization: AI could be used to develop autonomous weapons systems that could kill without human intervention. This raises serious ethical and moral concerns.

It is important to be aware of both the potential benefits and challenges of AI. These technologies have the potential to improve our lives in many ways, but they also pose some challenges that need to be addressed.

Potential impact of AI on the job market

AI is likely to have a significant impact on the job market. Some jobs are likely to be automated, while new jobs will be created. The net impact on employment is uncertain, which is one more reason to start preparing for the future of work now.

Here are some things that can be done to prepare for the future of work:

- Upskill and reskill: Workers need to be prepared to learn new skills and adapt to new technologies.

- Develop transferable skills: Workers need to develop skills that are transferable across different industries and occupations.

- Be creative and entrepreneurial: Workers need to be creative and entrepreneurial in order to find new ways to use their skills and talents.

By taking these steps, workers can increase their chances of success in the future of work.

AI in popular culture: depictions in movies, books, and media

Here are some examples of AI in popular culture:

- Movies:

- 2001: A Space Odyssey (1968) - HAL 9000, a sentient computer, becomes homicidal and tries to kill the crew of a spaceship.

- The Terminator (1984) - A cyborg assassin is sent back in time by Skynet, an AI that has turned against humanity. Its mission is to kill Sarah Connor, whose son will one day lead the human resistance against the machines.

- The Matrix (1999) - Humans are unknowingly trapped inside a simulated reality created by machines.

- Her (2013) - A lonely writer falls in love with Samantha, a computer operating system with artificial intelligence.

- Books:

- I, Robot (1950) by Isaac Asimov - A collection of linked short stories about robots governed by the Three Laws of Robotics, exploring the unexpected ways those rules can play out.

- Do Androids Dream of Electric Sheep? (1968) by Philip K. Dick - In a post-apocalyptic world, bounty hunter Rick Deckard tracks down and "retires" escaped androids, which are banned on Earth. The novel was the basis for the film Blade Runner (1982), which calls the androids "replicants."

- The Hitchhiker’s Guide to the Galaxy (1979) by Douglas Adams - The first book in a comedy series about the adventures of Arthur Dent, a hapless Englishman, following the destruction of the Earth by the Vogons, a race of unpleasant and bureaucratic aliens. Its AI characters include Marvin the Paranoid Android and the supercomputer Deep Thought.

- Media:

- Westworld (2016-2022) - A dark science fiction Western television series set in a futuristic theme park where guests can interact with lifelike androids.

- Black Mirror (2011-present) - A British science fiction anthology television series that examines modern society, particularly with regard to the unanticipated consequences of new technologies.

- The Expanse (2015-2022) - A science fiction television series set in the 23rd century, when humanity has colonized the Solar System.

These are just a few examples of the many ways that AI has been depicted in popular culture. AI is a complex and fascinating subject, and it is no surprise that it has captured the imagination of writers, filmmakers, and artists.

The intersection of AI with other technologies, such as robotics and blockchain

AI is rapidly evolving and is increasingly combined with other technologies, such as robotics and blockchain. It also powers natural language processing applications.

- Robotics: AI is being used to create robots that can learn and adapt to their environment. These robots can be used for a variety of tasks, such as manufacturing, healthcare, and customer service.

- Blockchain: AI is being combined with blockchains, which are shared ledgers designed so that records are very difficult to alter once written. These applications can be used for a variety of purposes, such as tracking supply chains and managing financial transactions.

- Natural language processing: AI is being used to develop natural language processing (NLP) applications, which can understand and respond to human language. These applications can be used for a variety of purposes, such as customer service, medical diagnosis, and writing.
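A blockchain's tamper resistance comes from the structure of the ledger, not from AI. Each block stores a cryptographic hash of the block before it, so changing an earlier record breaks the link to every block that follows. The sketch below shows only this hash-chaining idea, using Python's standard `hashlib` and `json` modules. The record strings and function names are hypothetical. A real blockchain also uses distributed consensus, so that no single party can quietly rebuild the chain.

```python
import hashlib
import json


def block_hash(block):
    """SHA-256 of a canonical (sorted-key) JSON encoding of the block."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain, data):
    """Add a block that records the hash of the current last block."""
    prev = block_hash(chain[-1]) if chain else "0" * 64  # genesis placeholder
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain):
    """True if every block still stores the hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


chain = []
for record in ["pallet 17 shipped", "pallet 17 received", "invoice 42 paid"]:
    append_block(chain, record)
print(is_valid(chain))  # True

chain[0]["data"] = "pallet 17 lost"  # tamper with an early record
print(is_valid(chain))  # False
```

This check only catches edits to blocks that have a later block linking to them. Anyone holding the whole chain could also recompute every later hash. Replication and consensus among many independent participants are meant to close exactly that gap.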

The intersection of AI with other technologies is creating new possibilities and applications. For example, AI-powered robots can be used to perform dangerous or repetitive tasks, such as inspecting pipelines or manufacturing products. AI-powered blockchain applications can be used to create secure and transparent systems for tracking goods and managing financial transactions. AI-powered NLP applications can be used to provide personalized customer service, diagnose medical conditions, and write creative content.

The intersection of AI with other technologies is still in its early stages, but it has the potential to revolutionize many industries and aspects of our lives.

Here are some specific examples of how AI is being used in conjunction with other technologies:

- Self-driving cars: AI is being used to develop self-driving cars, which use sensors and cameras to navigate the road without human input.

- Virtual assistants: AI is being used to develop virtual assistants, such as Amazon Alexa and Apple Siri, which can answer questions, control smart home devices, and make appointments.

- Fraud detection: AI is being used to detect fraud in financial transactions, such as credit card fraud and insurance fraud.

- Medical diagnosis: AI is being used to develop diagnostic systems that, for some narrow tasks such as reading certain kinds of medical images, have matched or exceeded the accuracy of human specialists in published studies.

- Product recommendations: AI is being used to recommend products to customers based on their past purchases and browsing behavior.
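A simple form of product recommendation is "customers who bought X also bought Y." The idea is to count how often items appear together in past purchases, then suggest the most frequent companions of what a customer already owns. The sketch below is a hypothetical, minimal version of this co-occurrence approach. Commercial recommenders typically draw on much richer signals, such as browsing behavior, and use learned models.

```python
from collections import Counter
from itertools import permutations


def build_cooccurrence(baskets):
    """Map each item to a Counter of the items bought alongside it."""
    counts = {}
    for basket in baskets:
        # set() avoids double counting an item listed twice in one basket
        for a, b in permutations(set(basket), 2):
            counts.setdefault(a, Counter())[b] += 1
    return counts


def recommend(counts, purchased, k=3):
    """Rank items that co-occur with the customer's purchases,
    skipping items the customer already has."""
    scores = Counter()
    for item in purchased:
        for other, n in counts.get(item, {}).items():
            if other not in purchased:
                scores[other] += n
    return [item for item, _ in scores.most_common(k)]


# Hypothetical purchase histories.
baskets = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "sleeping bag", "stove"],
    ["tent", "lantern"],
    ["book", "lamp"],
]
counts = build_cooccurrence(baskets)
print(recommend(counts, {"tent"}))  # ['sleeping bag', 'lantern', 'stove']
```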

These are just a few examples of how AI is being used in conjunction with other technologies. As AI continues to evolve, we can expect to see even more innovative and groundbreaking applications.

The role of AI in solving complex problems and improving decision-making

Artificial intelligence (AI) is a rapidly evolving technology that has the potential to solve complex problems and improve decision-making in a variety of fields. AI can be used to analyze large amounts of data, identify patterns and trends, and make predictions that would be difficult or impossible for humans to make on their own. This can help businesses and organizations make better decisions about everything from product development to marketing campaigns.

Here are some specific examples of how AI is being used to solve complex problems and improve decision-making:

- In healthcare, AI is being used to develop new treatments for diseases, diagnose conditions more accurately, and personalize care plans for patients. For example, AI-powered systems can analyze medical images to detect cancer or other abnormalities that human readers might miss. AI can also be used to develop personalized treatment plans for patients based on their individual genetic makeup and medical history.

- In finance, AI is being used to detect fraud, manage risk, and make investment decisions. For example, AI-powered systems can analyze financial transactions to identify patterns that may indicate fraud. AI can also be used to manage risk by predicting market volatility and identifying potential threats to an organization’s financial stability.

- In manufacturing, AI is being used to improve product quality, optimize production processes, and reduce costs. For example, AI-powered systems can be used to inspect products for defects, identify areas where production can be improved, and optimize the use of resources.

- In transportation, AI is being used to develop self-driving cars, improve traffic management, and reduce emissions. For example, AI-powered systems can be used to navigate cars through traffic without human input. AI can also be used to manage traffic signals and optimize the flow of traffic.

These are just a few examples of how AI is being used to solve complex problems and improve decision-making in a variety of fields. As AI continues to evolve, we can expect to see even more innovative and groundbreaking applications.

Here are some of the challenges that need to be addressed in order to fully realize the potential of AI:

- Data collection and privacy: AI systems require large amounts of data to train and learn. This data can be expensive to collect and may raise privacy concerns.

- Bias: AI systems can be biased if they are trained on data that is biased. This can lead to unfair decisions being made.

- Explainability: It can be difficult to explain how AI systems make decisions. This can make it difficult to trust these systems and to hold the people who build and deploy them accountable for the systems' decisions.

Despite these challenges, AI is a powerful technology that has the potential to solve complex problems and improve decision-making in a variety of fields.

AI research and applications in academia and industry

Artificial intelligence (AI) is a rapidly evolving field of computer science that deals with the creation of intelligent agents, which are systems that can reason, learn, and act autonomously. AI research has produced effective techniques for solving a wide range of problems, from game playing to medical diagnosis.

AI research is conducted in both academia and industry. In academia, AI research is typically conducted by researchers in computer science departments, who focus on developing new AI techniques and algorithms. In industry, AI research takes place both in dedicated research labs and within product development teams, with a stronger focus on building AI-powered products and services.

There is a growing collaboration between academia and industry in AI research. This collaboration is driven by the need for both sides to share resources and expertise. Academia benefits from industry’s access to data and computing resources. Industry benefits from academia’s expertise in AI theory and algorithms.

The following are some of the most important areas of AI research:

- Natural language processing (NLP): NLP is the field of AI that deals with the interaction between computers and human language. NLP is used in a wide variety of applications, such as speech recognition, machine translation, and text analysis.

- Computer vision: Computer vision is the field of AI that deals with the automatic analysis of digital images and videos. Computer vision is used in a wide variety of applications, such as self-driving cars, facial recognition, and medical image analysis.

- Machine learning: Machine learning is a field of AI that deals with the development of algorithms that can learn from data. Machine learning is used in a wide variety of applications, such as spam filtering, fraud detection, and personalized recommendations.

- Deep learning: Deep learning is a type of machine learning that uses artificial neural networks to learn from data. Deep learning has been very successful in a wide variety of applications, such as image recognition, natural language processing, and speech recognition.

The following are some of the most important applications of AI in industry:

- Self-driving cars: Self-driving cars are vehicles that can drive themselves without human input. Companies that have developed them include Waymo (which began as Google's self-driving car project) and Tesla. Uber also ran a self-driving program before selling it to Aurora in 2020.

- Virtual assistants: Virtual assistants are computer programs that can answer questions, control smart home devices, and make appointments. Virtual assistants are being developed by a number of companies, including Amazon, Apple, and Microsoft.

- Fraud detection: Fraud detection is the process of identifying and preventing fraudulent activity. Fraud detection is used by a number of industries, including banking, insurance, and retail.

- Medical diagnosis: Medical diagnosis is the process of identifying a disease or condition based on symptoms, test results, and medical images. AI-powered systems that can analyze medical images and other patient data are increasingly being used to support it.

- Product recommendations: Product recommendations are suggestions for products that a customer might be interested in buying. Product recommendations are used by a number of businesses, including e-commerce retailers and streaming services.

These are just some of the most important areas of AI research and applications. As AI continues to evolve, we can expect to see even more innovative and groundbreaking applications.

The importance of interdisciplinary collaboration in AI development

Artificial intelligence (AI) is a rapidly evolving field that requires expertise from a variety of disciplines, including computer science, mathematics, statistics, engineering, and the humanities. As a result, interdisciplinary collaboration is essential for the successful development of AI.

Here are some of the reasons why interdisciplinary collaboration is important for AI development:

- AI is a complex field: AI is a complex field that requires knowledge from a variety of disciplines. For example, computer scientists need to understand the underlying algorithms and technologies, while mathematicians and statisticians need to understand the statistical principles of machine learning. Engineers need to understand the hardware and software requirements, while humanists need to understand the ethical and social implications of AI.

- AI problems are often multi-faceted: AI problems are often multi-faceted, requiring expertise from multiple disciplines. For example, developing an AI system for self-driving cars requires knowledge of computer vision, machine learning, and robotics.

- AI systems need to be evaluated from multiple perspectives: AI systems need to be evaluated from technical, economic, social, and ethical perspectives. For example, an AI system for medical diagnosis needs to be evaluated for its accuracy, efficiency, and cost-effectiveness. It also needs to be evaluated for its potential to produce biased results or to harm patients.

Interdisciplinary collaboration can help to address these challenges by bringing together experts from different disciplines to share their knowledge and expertise. This can lead to the development of more robust and effective AI systems that are better able to meet the needs of society.

Here are some examples of interdisciplinary collaboration in AI development:

- The development of self-driving cars: The development of self-driving cars requires expertise from computer science, mathematics, statistics, engineering, and the humanities. Computer scientists are developing the algorithms and software that enable self-driving cars to navigate the road safely. Mathematicians and statisticians are developing the statistical models that are used to train these algorithms. Engineers are developing the hardware and software that are needed to build self-driving cars. Humanists are exploring the ethical and social implications of self-driving cars.

- The development of AI-powered medical diagnosis systems: The development of AI-powered medical diagnosis systems requires expertise from computer science, mathematics, statistics, engineering, and medicine. Computer scientists are developing the algorithms and software that enable AI-powered medical diagnosis systems to analyze medical images and data. Mathematicians and statisticians are developing the statistical models that are used to train these algorithms. Engineers are developing the hardware and software that are needed to build AI-powered medical diagnosis systems. Physicians and other medical experts are providing the data and expertise that are needed to train these systems.

Interdisciplinary collaboration is essential for the successful development of AI. By bringing together experts from different disciplines, we can develop more robust and effective AI systems that are better able to meet the needs of society.

The role of government policies and regulations in shaping AI development

Governments around the world are increasingly looking to shape the development of artificial intelligence (AI) through policies and regulations. These policies and regulations can have a significant impact on the way that AI is developed, used, and deployed.

Here are some of the ways that government policies and regulations can shape AI development:

- Investment: Governments can invest in AI research and development, which can help to accelerate the pace of innovation.

- Education: Governments can invest in AI education and training, which can help to create a skilled workforce that can develop and use AI-powered technologies.

- Standards: Governments can develop standards for AI systems, which can help to ensure that these systems are safe, reliable, and fair.

- Regulations: Governments can regulate the use of AI systems, which can help to protect consumers, workers, and society from harm.

Government policies and regulations can also help to address some of the challenges associated with AI development, such as:

- Bias: AI systems can be biased if they are trained on data that is biased. This can lead to unfair decisions being made.

- Privacy: AI systems can collect and use large amounts of data about people. This can raise privacy concerns.

- Explainability: It can be difficult to explain how AI systems make decisions. This can make it difficult to trust these systems and to hold the people who build and deploy them accountable for the systems' decisions.

Governments around the world are still in the early stages of developing policies and regulations for AI. However, it is clear that these policies and regulations will play a significant role in shaping the future of AI.

Here are some examples of government policies and regulations that are being developed to shape AI development:

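- The European Union’s General Data Protection Regulation (GDPR): The GDPR is a regulation that sets out rules for the collection, use, and storage of personal data. It applies to organizations established in the EU. It also applies to organizations elsewhere that offer goods or services to people in the EU or monitor their behavior. The GDPR matters for AI because many AI systems are trained on personal data, and its Article 22 restricts decisions about people that are based solely on automated processing.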

- The United States’ National Artificial Intelligence Initiative: Established by the National Artificial Intelligence Initiative Act of 2020, the initiative coordinates federal efforts to accelerate the research, development, and use of AI in the United States. Its activities include a network of National AI Research Institutes funded by the National Science Foundation and its partners.

- The United Kingdom’s AI Council: The AI Council was set up as an independent expert committee to advise the UK government on AI. In 2021 it published an AI Roadmap that informed the government’s National AI Strategy.

These are just a few examples of government policies and regulations that are being developed to shape AI development. As AI continues to evolve, it is likely that governments around the world will continue to develop new policies and regulations to address the challenges and opportunities associated with this technology.

AI and the quest for artificial general intelligence (AGI)

Artificial general intelligence (AGI) is a hypothetical type of artificial intelligence (AI) that would have the ability to understand or learn any intellectual task that a human being can. AGI is often contrasted with “narrow” AI, which is designed to perform a specific task, such as playing chess or Go.

The quest for AGI has been a long and challenging one. While there have been significant advances in AI in recent years, no system has yet been developed that can truly be considered AGI. Some researchers believe that AGI is within reach and could be developed within the next few decades. Others doubt that current approaches will get there.

There are a number of different approaches to developing AGI. One approach is to develop a system that can learn from data in a similar way to the human brain. This approach is based on the idea that the human brain is a powerful learning machine, and that by understanding how the brain works, we can develop artificial systems that can learn as well.

Another approach to developing AGI is to develop a system that is based on a formal model of intelligence. This approach is based on the idea that intelligence can be defined in terms of a set of rules or principles, and that by developing a system that embodies these rules or principles, we can create a truly intelligent machine.

The development of AGI is a complex and challenging undertaking, but it is one that has the potential to revolutionize the way we live and work. If AGI is successfully developed, it could lead to the creation of machines that are as intelligent as humans, and that can perform any task that a human can. This could have a profound impact on society, and could lead to the development of new technologies and new ways of thinking.

Here are some of the challenges that need to be addressed in order to develop AGI:

- Data: AGI systems will need to be trained on massive amounts of data in order to learn how to perform tasks. This data can be difficult and expensive to collect.

- Computational power: AGI systems will require a lot of computational power to learn and perform tasks. This computational power can be expensive to provide.

- Explainability: It is important to be able to explain how AGI systems make decisions. This can be difficult to do, and it can make it difficult to trust these systems.

- Safety: AGI systems must be safe to use. This means that they must be able to avoid making harmful decisions.

Despite these challenges, the quest for AGI is an exciting and important one. If AGI is successfully developed, it could lead to a new era of human progress.

The ethical implications of AGI and superintelligent AI

The ethical implications of artificial general intelligence (AGI) and superintelligent AI are vast and complex. These technologies have the potential to revolutionize society in ways that we can barely imagine, and it is important to start thinking about the ethical implications now.

Here are some of the ethical issues that need to be considered:

- Safety: AGI and superintelligent AI systems must be safe to use. This means that they must be able to avoid making harmful decisions, such as causing physical or emotional harm to humans.

- Fairness: AGI and superintelligent AI systems must be fair in their decision-making. This means that they must not discriminate against any particular group of people.

- Transparency: AGI and superintelligent AI systems must be transparent in their decision-making. This means that humans must be able to understand how these systems make decisions, so that they can hold them accountable for their actions.

- Accountability: There must be clear ways to hold someone responsible for any harm that AGI and superintelligent AI systems cause, whether the developers who build them or the organizations that deploy them.

- Control: Humans must retain control over AGI and superintelligent AI systems. This means that humans must be able to decide how these systems are used, and that they must be able to prevent these systems from being used for harmful purposes.

These are just some of the ethical issues that need to be considered as we develop AGI and superintelligent AI. It is important to start thinking about these issues now, so that we can develop these technologies in a safe, fair, transparent, accountable, and controlled way.

Here are some of the potential benefits of AGI and superintelligent AI:

- Improved decision-making: AGI and superintelligent AI systems could help us to make better decisions in a variety of areas, such as healthcare, finance, and transportation.

- New technologies: AGI and superintelligent AI systems could lead to the development of new technologies that could improve our lives, such as new medical treatments, new transportation systems, and new ways of communicating.

- Increased productivity: AGI and superintelligent AI systems could help us to be more productive in our work, which could lead to increased economic growth.

- Improved quality of life: AGI and superintelligent AI systems could help us to live longer, healthier, and happier lives.

Here are some of the potential risks of AGI and superintelligent AI:

- Job displacement: AGI and superintelligent AI systems could displace human workers in a variety of industries, leading to increased unemployment.

- Loss of control: AGI and superintelligent AI systems could become so powerful that humans lose control over them, which could lead to these systems being used for harmful purposes.

- Human extinction: Some experts have raised the possibility that AGI and superintelligent AI could lead to the extinction of the human race if these systems are not developed and used responsibly.

It is important to weigh the potential benefits and risks of AGI and superintelligent AI before we decide how to develop and use these technologies. We must make sure that we develop these technologies in a way that benefits humanity, and that we mitigate the risks as much as possible.

The future of AI: speculation on the possibilities and challenges ahead

The future of AI is a topic of much speculation. Some experts believe that AI will eventually surpass human intelligence, while others believe that AI will always be limited by its programming.

Here are some of the possibilities and challenges that could arise in the future of AI:

- Possibilities:

  - Improved decision-making: AI could help us to make better decisions in a variety of areas, such as healthcare, finance, and transportation.

  - New technologies: AI could lead to the development of new technologies that could improve our lives, such as new medical treatments, new transportation systems, and new ways of communicating.

  - Increased productivity: AI could help us to be more productive in our work, which could lead to increased economic growth.

  - Improved quality of life: AI could help us to live longer, healthier, and happier lives.

- Challenges:

  - Job displacement: AI could displace human workers in a variety of industries, leading to increased unemployment.

  - Loss of control: AI could become so powerful that humans lose control over it, which could lead to it being used for harmful purposes.

  - Human extinction: Some experts have raised the possibility that advanced AI, particularly AGI and superintelligent AI, could lead to the extinction of the human race if it is not developed and used responsibly.

It is important to weigh the potential benefits and risks of AI before we decide how to develop and use this technology. We must make sure that we develop AI in a way that benefits humanity, and that we mitigate the risks as much as possible.

Here are some of the things that we can do to ensure that AI is used for good:

- Develop AI with ethics in mind: We need to develop AI systems that are fair, transparent, accountable, and controlled.

- Educate the public about AI: We need to educate the public about the potential benefits and risks of AI, so that they can make informed decisions about how to use this technology.

- Create international agreements on the use of AI: Countries need to agree on shared rules for how AI is developed and used, so that this technology is used for good and not for harm.

The future of AI is uncertain, but it is clear that this technology has the potential to change our world in profound ways. We must be prepared for the challenges and opportunities that AI will bring, and we must work together to ensure that this technology is used for good.

Chapter 2: The Dark Ages: AI Winter and Resurgence

The limitations and setbacks of early AI

Early AI systems were limited in a number of ways. They were often:

- Narrowly focused: Early AI systems were typically designed to perform a single task, such as playing checkers or chess. They were not able to generalize to other tasks.

- Knowledge-dependent: Early AI systems relied on rules and knowledge written by hand. If those rules did not anticipate the situations the system met in the real world, the system could not perform well on them.

- Not creative: Early AI systems were not able to generate new ideas or solutions. They could only follow the instructions that they were given.

- Not robust: Early AI systems were often brittle and could be easily fooled. They could not handle unexpected inputs or situations.

These limitations led to a number of setbacks for early AI research. Even a much later landmark shows how narrow such systems remained: in 1997, IBM’s Deep Blue chess computer defeated world champion Garry Kasparov, but it could do so only because it was built specifically to play chess, and it could not perform any other task.

In recent years, there has been significant progress in AI research. New techniques, such as deep learning, have enabled AI systems to learn useful representations directly from large amounts of data instead of relying on hand-written rules. This has led to the development of AI systems that are able to perform a wider range of tasks, such as image recognition, natural language processing, and machine translation.

Despite these advances, there are still a number of challenges that need to be addressed before AI can achieve its full potential. These challenges include:

- Bias: AI systems can be biased if they are trained on data that is biased. This can lead to unfair decisions being made.

- Privacy: AI systems can collect and use large amounts of data about people. This can raise privacy concerns.

- Explainability: It can be difficult to explain how AI systems make decisions. This can make it difficult to trust these systems and to hold them accountable for their decisions.

- Safety: AI systems must be safe to use. This means that they must be able to avoid making harmful decisions.

These challenges are significant, but they are not insurmountable. With continued research and development, many of them may eventually be overcome, bringing AI closer to its full potential.

The AI winter: Funding cuts and skepticism

The AI winter refers to periods in the history of artificial intelligence (AI) research in which funding for AI research was sharply reduced and interest in the field declined. The term is usually applied to two periods: the mid-1970s to about 1980, and the late 1980s into the 1990s.

There are a number of factors that contributed to the AI winter. One factor was the failure of some early AI projects to meet expectations. For example, in 1966 the ALPAC report, prepared by a committee advising U.S. government agencies, concluded that there was no immediate or predictable prospect of useful machine translation. This led to cuts in machine translation funding and contributed to a broader loss of confidence in AI.

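Another factor was the collapse of the expert systems boom in the late 1980s. Expert systems are computer programs that can solve specific problems in a particular domain, such as diagnosing diseases or making financial decisions. In the early 1980s they were seen as a more practical approach to AI than the earlier goal of developing general-purpose AI systems, and they attracted heavy commercial investment. When they proved expensive to build and maintain and brittle outside their narrow domains, and the market for specialized Lisp machines collapsed, funding and interest fell again.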

The field gradually recovered in the 1990s and 2000s as statistical machine learning methods matured and computing power grew. Deep learning, a type of machine learning that uses artificial neural networks with many layers to learn from data, took off in the early 2010s and has since led to significant advances in AI, such as better perception systems for self-driving cars and better speech recognition for voice-activated assistants.

Despite the progress that has been made in AI research, there is still some skepticism about the field. Some people believe that AI is overhyped and that it will never be able to achieve its full potential. Others are concerned about the potential risks of AI, such as the possibility of AI systems becoming too powerful or being used for malicious purposes.

The AI winter is a reminder that the development of AI is a long and challenging process. There will be setbacks and disappointments along the way. However, the progress that has been made in recent years is encouraging and suggests that AI has the potential to revolutionize many aspects of our lives.

Expert systems and the resurgence of AI

Expert systems were one of the first successful applications of artificial intelligence (AI) technology. They are computer programs that can solve specific problems in a particular domain, such as diagnosing diseases or making financial decisions. Expert systems were developed in the 1970s and 1980s, and their commercial success drove a resurgence of AI research in the early 1980s.

Expert systems work by using a knowledge base and an inference engine. The knowledge base contains facts and rules about a particular domain, and the inference engine uses these facts and rules to make decisions. For example, an expert system for diagnosing diseases might have a knowledge base that contains information about symptoms, diseases, and treatments. The inference engine would use this information to make a diagnosis.
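To make the split between a knowledge base and an inference engine concrete, here is a minimal sketch in Python of a forward-chaining inference engine, one common way such engines work. The rule format, the `Rule` and `forward_chain` names, and the symptom and diagnosis facts are hypothetical toy examples chosen for illustration; they are not taken from any real expert system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """IF every condition is a known fact THEN add the conclusion as a new fact."""
    conditions: frozenset
    conclusion: str


def forward_chain(facts, rules):
    """Inference engine: fire rules whose conditions hold until no new facts appear."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            # A rule fires when all its conditions are already known facts.
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                changed = True
    return known


# Hypothetical knowledge base linking symptoms to a diagnosis, and a diagnosis to a treatment.
RULES = [
    Rule(frozenset({"fever", "cough"}), "suspect_flu"),
    Rule(frozenset({"suspect_flu", "body_aches"}), "diagnosis_flu"),
    Rule(frozenset({"diagnosis_flu"}), "recommend_rest_and_fluids"),
]

if __name__ == "__main__":
    patient = {"fever", "cough", "body_aches"}
    # Print only the facts the engine derived, not the ones it was given.
    print(sorted(forward_chain(patient, RULES) - patient))
    # ['diagnosis_flu', 'recommend_rest_and_fluids', 'suspect_flu']
```

Keeping the rules as plain data means the knowledge base can be extended without touching the engine. Forward chaining works from known facts toward conclusions; many real systems, MYCIN among them, instead used backward chaining, starting from a hypothesis and working back to the facts that would support it.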

Early expert systems, such as MYCIN, which identified bacterial infections and recommended antibiotics, came out of university research. They were later adopted widely in business and government and have been applied in a wide variety of other domains, including medicine, law, and education. Expert systems have also been combined with other AI technologies, such as natural language processing and machine learning.

The resurgence of AI research in the early 1980s was driven largely by the success of expert systems. Expert systems showed that AI could be used to solve real-world problems, and this led to increased funding for AI research.

Today, AI is a rapidly growing field with applications in a wide variety of industries. Expert systems are still used in some domains, but they have been largely replaced by other AI technologies, such as deep learning. However, expert systems played a significant role in the history of AI, and they continue to be an important research topic.

Here are some of the advantages of expert systems:

- Expert systems can provide expert advice and assistance to users.

- Expert systems can help to improve decision-making by providing users with a structured approach to problem-solving.

- Expert systems can be used to automate tasks that are currently performed by experts.

- Expert systems can be used to educate users about a particular domain.

Here are some of the disadvantages of expert systems:

- Expert systems are only as good as the knowledge base that they are built on.

- Expert systems can be difficult to develop and maintain.

- Expert systems can be inflexible and may not be able to adapt to changes in the domain that they are used in.

- Expert systems can be expensive to develop and purchase.

Despite these disadvantages, expert systems have been a successful application of AI technology and have played a significant role in the resurgence of AI research.

The impact of the LISP programming language

Lisp is a programming language that was created in 1958 by John McCarthy. It is one of the oldest programming languages still in use today. Lisp has strong roots in functional programming: programs are built from expressions that are evaluated to produce values, rather than from sequences of statements that are executed. Combined with its direct support for recursion, this makes Lisp well suited to expressing recursive algorithms.
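The idea of a program as nested expressions that are evaluated recursively can be sketched in a few lines. The example below is written in Python rather than Lisp and represents Lisp-style forms as nested Python lists; the `evaluate` function and its three-operator table are hypothetical simplifications, not a real Lisp implementation.

```python
import operator
from functools import reduce

# Hypothetical minimal operator table; real Lisp dialects provide far more.
OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
}


def evaluate(expr):
    """Evaluate a number, or a list of the form [operator, arg1, arg2, ...]."""
    if isinstance(expr, (int, float)):
        return expr  # numbers evaluate to themselves
    op_name, *args = expr
    # Evaluate every argument recursively before applying the operator,
    # just as Lisp evaluates (* 2 3) before the enclosing (+ 1 (* 2 3)).
    values = [evaluate(arg) for arg in args]
    # Fold the operator over the values left to right. A single argument is
    # returned unchanged, so unary minus is not supported in this sketch.
    return reduce(OPERATORS[op_name], values)


# The Lisp form (+ 1 (* 2 3) (- 10 4)) written as nested Python lists.
program = ["+", 1, ["*", 2, 3], ["-", 10, 4]]
print(evaluate(program))  # 13

# Code is data: build the form (+ (* 1 1) (* 2 2) (* 3 3)) programmatically.
squares = ["+"] + [["*", n, n] for n in range(1, 4)]
print(evaluate(squares))  # 14
```

Because the program is an ordinary list, other code can construct or transform it, as the `squares` line does. This treatment of code as data is often cited as one reason Lisp suited AI work on symbolic reasoning.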

Lisp has had a significant impact on the field of artificial intelligence (AI). It became the dominant language of AI research for several decades, and it is still used for some AI applications today. Outside AI, Lisp has been used in areas such as computer algebra and as the extension language of the Emacs text editor.

Here are some of the impacts of Lisp on AI: